Indian enterprises generate massive volumes of voice data every day through contact centres, IVR systems, field operations, and voice-enabled platforms. Yet much of this data remains unusable because real-world speech is multilingual, accented, noisy, and highly contextual across Indian markets. With 57% of urban internet users preferring regional languages, monolingual voice systems fail to reflect how India actually speaks.

For product and technology leaders, the challenge is not capturing voice but converting it into accurate, system-ready intelligence. Mixed-language conversations, dialect variation, low-fidelity audio, and integration gaps routinely break basic speech recognition systems, resulting in fragmented workflows, missed insights, and inconsistent customer experience.

In this blog, you’ll explore multilingual speech recognition trends shaping Indian enterprises, covering code-switching realities, deployment complexity, and why scalable, integration-ready ASR has become essential today.

At a Glance

- Multilingual processing is essential: Enterprises must handle Hindi, English, and regional languages accurately to support large-scale operations.

- Code-switching needs context-aware ASR: Mixed-language conversations require domain-adapted models and continuous learning pipelines.

- Real-world audio is challenging: Noise, low-bitrate calls, and field recordings demand robust audio preprocessing and far-field recognition.

- Integration unlocks insights: Embedding ASR into CRM, analytics, and workflows converts voice data into actionable intelligence.

- Scalable, low-latency systems are critical: Streaming pipelines, edge inference, and hybrid deployments ensure real-time performance across India’s linguistic diversity.

Evolution of Speech Recognition Technology

Speech recognition has progressed from rigid, rule-based systems to machine learning models capable of understanding natural, continuous speech. Early engines struggled with accents, structured commands, and real-world variability, limiting their enterprise usability. Today, over 58% of smartphone users actively use voice commands, and nearly 40% of global contact centres rely on ASR for multilingual, real-time customer engagement.

Modern architectures use neural networks and end-to-end deep learning to optimise acoustic and language models simultaneously. They support streaming and batch transcription, contextual understanding, and domain-specific adaptation, handling noisy and low-fidelity audio across contact centres, IVR systems, and mobile applications.

This evolution highlights why enterprises in India now need multilingual speech recognition that accurately captures code-switched, regional, and mixed-language interactions.

Why Multilingual Speech Recognition Matters?

India’s linguistic diversity creates a complex environment for enterprise voice interactions. Customers frequently switch between Hindi, English, and regional languages within the same conversation, making accurate transcription a critical requirement for digital platforms.

For enterprises, this complexity translates into operational and analytical challenges:

- High call volumes across multiple languages: Contact centres and IVR systems must manage interactions in Hindi, Tamil, Telugu, Marathi, and other languages simultaneously.

- Inconsistent transcription across dialects: Regional variations reduce recognition accuracy if models are not trained for local speech patterns.

- Limited scalability for voice data analysis: Large volumes of multilingual audio require real-time processing pipelines to extract actionable insights.

- Manual effort in multilingual support: Without reliable ASR, teams spend excessive time transcribing, translating, and analysing voice interactions.

- Reduced accessibility for regional-language users: Poor recognition systems can exclude users who are not comfortable with English-only interfaces.

Looking to turn multilingual voice data into actionable insights across your enterprise workflows? Explore Reverie Speech-to-Text with real-time streaming, domain-aware models, and seamless integration. Get started today!

Also Read: How Reverie’s Speech-to-Text API is Reshaping Businesses in India

Modern multilingual speech recognition systems address these issues by providing real-time, context-aware transcription, supporting enterprise-specific vocabulary, and integrating seamlessly into workflows, enabling organisations to turn voice data into operational intelligence.

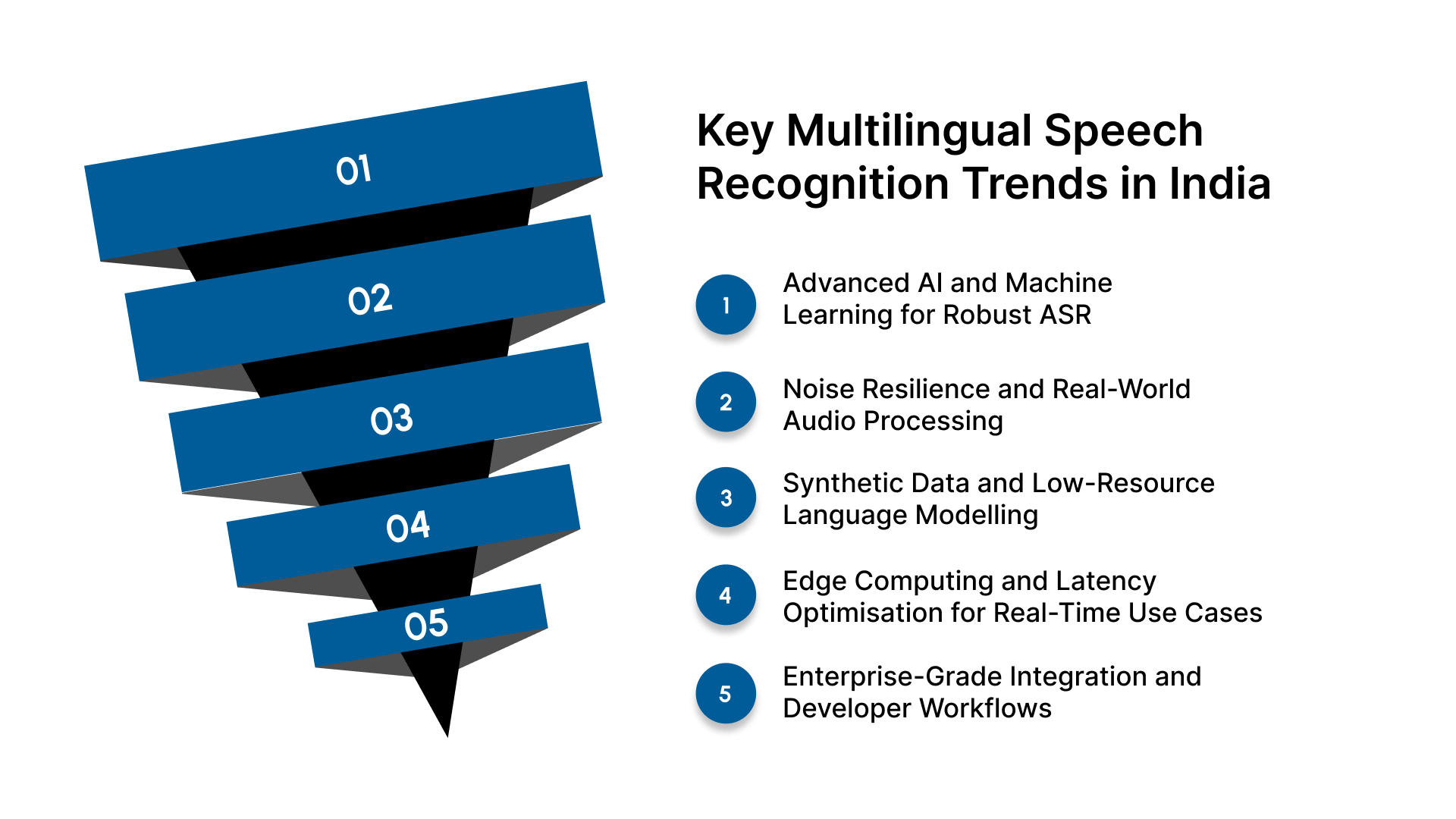

Key Multilingual Speech Recognition Trends in India

Multilingual speech recognition has become a core digital infrastructure for enterprises operating in linguistically diverse markets like India. The following trends highlight how multilingual speech recognition is being designed and deployed for Indian enterprise environments:

1. Advanced AI and Machine Learning for Robust ASR

Neural network-driven architectures have replaced traditional acoustic models across most enterprise deployments. These models are trained to understand conversational speech patterns rather than scripted commands.

Key technical advances shaping multilingual ASR include:

- End-to-end neural architectures that jointly optimise acoustic and language modelling

- Domain-adapted language models trained on enterprise-specific terminology

- Context-aware decoding for conversational speech

- Continuous learning pipelines to improve recognition quality over time

Real-world Example: IRCTC’s AskDISHA 2.0 AI assistant supports voice and text interactions in multiple Indian languages, including Hindi, Hinglish, and English, enabling users to book tickets, check PNR status, cancel bookings, and track refunds using natural language commands on the official mobile app and website. The voice‑enabled interface simplifies access for users across India’s diverse linguistic demographics.

For Indian enterprises, these advances directly improve how systems handle regional accents, dialect variation, and mixed-language conversations.

2. Noise Resilience and Real-World Audio Processing

Enterprise speech data is rarely captured in controlled environments. Call centre recordings, IVR interactions, field audio, and mobile conversations often contain background noise, compression artefacts, and inconsistent microphone quality.

Modern ASR platforms now incorporate:

- Noise suppression and echo cancellation

- Far-field speech recognition for hands-free environments

- Adaptive gain control for variable audio sources

- Signal enhancement for low-bitrate telephony audio

Real-world Example: Bharti Airtel has deployed AI‑powered speech analytics solutions in collaboration with NVIDIA to improve customer service across its large contact centre operations. The system processes a high volume of inbound calls, using automated speech recognition to analyse conversations and drive insights that improve agent performance and user experience across language interactions.

These capabilities allow enterprises to deploy speech recognition reliably across call centres, branch networks, logistics operations, and field workflows.

3. Synthetic Data and Low-Resource Language Modelling

Many Indian languages remain low-resource in terms of labelled speech datasets. This makes conventional data collection and annotation expensive and slow.

To address this, ASR platforms increasingly rely on:

- Synthetic speech generation to expand training corpora

- Transfer learning from high-resource to low-resource languages

- Cross-lingual model training for improved generalisation

- Active learning pipelines to continuously refine language models

Real-world Example: Under India’s Bhashini mission, IISc and ARTPARK are open‑sourcing 16,000+ hours of speech data across 22 Indian languages and multiple dialects from 773 districts. This diverse dataset enables developers to train and benchmark multilingual ASR models without high data costs.

These techniques enable enterprises to deploy speech recognition across languages such as Marathi, Kannada, Malayalam, Odia, and Assamese without waiting for massive annotated datasets.

4. Edge Computing and Latency Optimisation for Real-Time Use Cases

As voice becomes a real-time interaction layer, latency has become a critical performance metric. IVR flows, conversational bots, and live analytics systems require transcription responses within milliseconds to maintain a natural experience.

Modern ASR architectures now support:

- Streaming speech-to-text with sub-second latency

- Edge inference for bandwidth-constrained environments

- Hybrid cloud and on-prem deployments for regulated industries

- Horizontal scalability for peak traffic handling

Real-world example: Indian Railways’ RailMadad 2.0 portal processes over 12 lakh grievances in 90 days across 16 Indian languages, enabling real-time ticketing and service queries. Low-latency ASR pipelines ensure smooth, error-tolerant interactions at a national scale.

5. Enterprise-Grade Integration and Developer Workflows

Speech recognition is no longer deployed as a standalone service. It is embedded into complex digital ecosystems that include CRM systems, ticketing platforms, analytics engines, workflow automation tools, and data lakes.

Current trends in enterprise ASR integration include:

- API-first architectures for rapid application integration

- SDK support for web, mobile, and server-side environments

- Event-driven streaming pipelines for real-time processing

- Native connectors for analytics and data platforms

Real-world Example: Flipkart uses voice interfaces across its customer support and seller platforms to handle order queries and operational workflows in regional languages. These systems integrate speech recognition directly into backend order management and CRM platforms.

Curious how Indian-language speech can enhance customer interactions and analytics? Use Reverie Speech-to-Text for accurate, scalable multilingual transcription across apps, IVR, and workflows. Start today with free API credits and hands-on SDKs!

Also Read: Power of Speech to Text API: A Game Changer for Content Creation

These integration-ready capabilities allow enterprises to embed multilingual speech recognition seamlessly into CRM systems, analytics platforms, and operational workflows. Building on these trends, we now explore how voice-first strategies are shaping a multilingual, digitally connected India and what enterprises must consider to stay ahead.

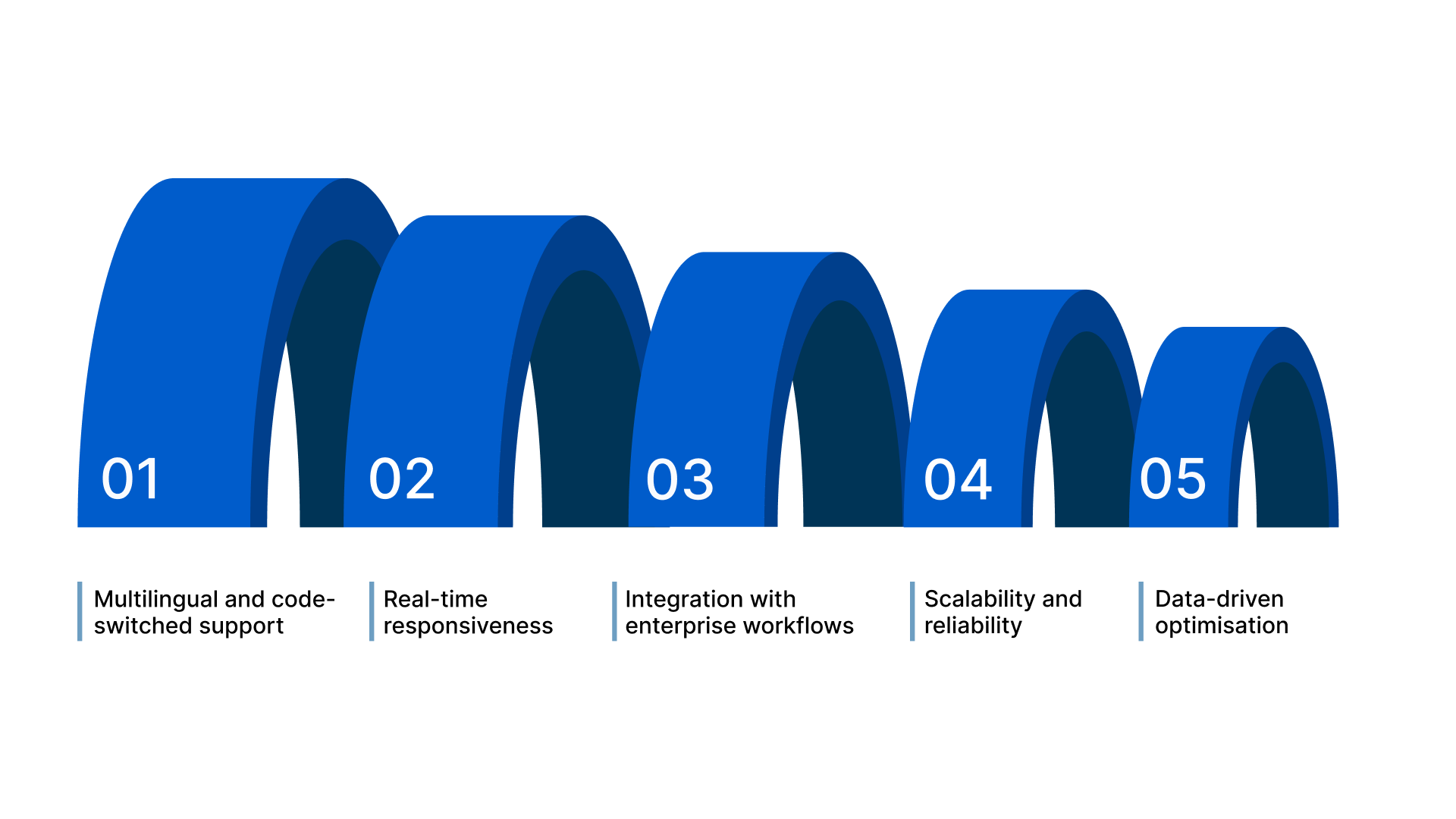

How Are Indian Enterprises Adopting Multilingual Voice?

As India’s digital ecosystem grows, enterprises are integrating multilingual speech recognition into apps, IVR systems, and digital platforms. Indian-language‑optimised ASR enables real-time, context-aware interactions, helping organisations improve operational efficiency, customer experience, and scalability. Key enterprise considerations include:

- Multilingual and code-switched support: Systems must accurately handle Hindi, Tamil, Telugu, Marathi, and mixed-language conversations to serve diverse audiences.

- Real-time responsiveness: Low-latency speech-to-text pipelines ensure natural, uninterrupted voice interactions across web, mobile, and IVR platforms.

- Integration with enterprise workflows: Voice interfaces should seamlessly connect with CRM systems, analytics platforms, and operational tools to deliver actionable insights.

- Scalability and reliability: Platforms must handle millions of simultaneous voice interactions, filter out background noise, and maintain transcription accuracy under variable conditions.

- Data-driven optimisation: Continuous model updates and contextual adaptation help enterprises refine recognition accuracy and operational efficiency over time.

Enterprises that embed these capabilities gain a measurable advantage in customer satisfaction, operational efficiency, and data intelligence. Multilingual speech recognition is no longer a distant trend; it is a strategic necessity for building voice-first, digitally inclusive services across India.

Final Thoughts

Enterprises in India must capture and process multilingual, code-switched voice data accurately at scale. Integration readiness, low-latency processing, and continuous language adaptation are key to turning voice interactions into reliable, actionable intelligence across diverse digital platforms.

Reverie Speech-to-Text meets these enterprise needs with Indian-language‑optimised ASR, supporting real-time streaming and batch transcription, domain-aware models, and seamless workflow integration. Organisations can embed voice capabilities into IVR systems, apps, and analytics platforms while maintaining accuracy across regional and mixed-language conversations.

Sign up now to see how Reverie can help scale your voice-first initiatives and convert multilingual speech into operational advantage.

FAQs

1. How does code-switching impact transcription accuracy in Indian enterprises?

Code-switching between English and regional languages introduces contextual ambiguity, increasing error rates in standard ASR. Enterprises require models trained on mixed-language corpora and domain-specific vocabulary to maintain accurate, real-time transcription across customer interactions.

2. Can multilingual ASR support field operations in rural or low-bandwidth regions?

Yes, modern ASR platforms with edge computing and adaptive streaming can process low-bitrate audio. This ensures voice-based workflows remain operational in rural areas, despite network fluctuations or limited connectivity, without compromising transcription reliability.

3. How do enterprises measure ROI from multilingual speech recognition?

ROI is measured through improved customer satisfaction, reduced call handling time, enhanced operational efficiency, and actionable voice analytics. Enterprises also track adoption metrics across regional language interactions to quantify scale and system performance.

4. What are the security considerations for enterprise voice data in India?

Enterprises must ensure data encryption, secure storage, and compliance with Indian privacy regulations, such as the PDPB. Role-based access, audit trails, and anonymisation of sensitive voice data safeguard user information while enabling analytics at scale.

5. How can enterprises continuously improve ASR accuracy for regional languages?

Continuous improvement relies on active learning pipelines, user feedback loops, and synthetic data augmentation. Enterprises update their models periodically to reflect dialect variations, emerging terms, and code-switched speech patterns to maintain sustained accuracy.