Voice interactions power core enterprise systems in India, yet much of the resulting voice data remains inaccessible due to inaccurate transcription. India’s internet user base is projected to exceed 900 million in 2025, with 98% accessing content in Indic languages. At that scale, generic speech recognition struggles with dialects, code-switching, and noisy audio, disrupting enterprise analytics and workflows.

When transcriptions are inaccurate or unavailable, enterprises lose operational intelligence, degrade IVR and support outcomes, and weaken automation and search across apps, bots, and voice systems. These gaps directly impact business decisions, compliance processes, and customer outcomes in multilingual contexts.

In this blog, you’ll see why enterprise speech recognition must deliver precise Indian language transcription integrated into digital workflows.

Key Takeaways

- What It Is: Speech recognition is a technology that converts spoken words into text, powering IVR, apps, and enterprise voice platforms.

- Supports Indian Languages & Mixed Speech: Accurate recognition of multiple Indian languages and code-switched inputs requires specialised ASR models.

- Enables Data-Driven Decisions: Real-time and batch transcription power analytics, automation, and compliance processes.

- Accuracy Determines ROI: Precise transcription enhances customer experience, workflow efficiency, and business intelligence.

- Enterprise-Grade Readiness: Requires APIs, SDKs, secure infrastructure, and scalable deployment for reliable integration.

What is a Speech Recognition System?

A speech recognition system is an enterprise-grade technology that converts spoken audio into structured, machine-readable text using automatic speech recognition (ASR). In business environments, it operates across real-time voice streams and recorded audio files from IVR systems, contact centres, mobile apps, web platforms, and voice bots.

Unlike consumer dictation tools, enterprise speech recognition integrates directly into digital workflows through APIs and SDKs. It supports multilingual speech, speaker identification, keyword detection, analytics pipelines, and domain-specific vocabulary, enabling organisations to transform unstructured voice data into actionable business intelligence.

Now that the foundation is clear, let’s examine why speech recognition technology has become mission-critical for Indian enterprises.

Why Speech Recognition Systems Matter for Indian Enterprises

India’s digital economy spans numerous languages, including Hindi, Tamil, Telugu, Marathi, Bengali, Gujarati, Kannada, Malayalam, and Punjabi. Without reliable speech recognition, this multilingual voice data remains fragmented and unusable. Here are a few key business drivers:

- Multilingual Customer Engagement: Enables access to regional languages across IVR systems, mobile apps, and voice platforms.

- Operational Intelligence: Converts call recordings and voice interactions into searchable, actionable data.

- Automation and Workflow Integration: Powers bots, CRM systems, ticketing platforms, and analytics pipelines.

- Compliance and Audit Readiness: Creates searchable records for regulatory and legal requirements.

For Indian enterprises, speech recognition is a vital layer of digital infrastructure, and exploring how it works will reveal how large-scale, multilingual voice data is efficiently processed.

How a Speech Recognition System Works

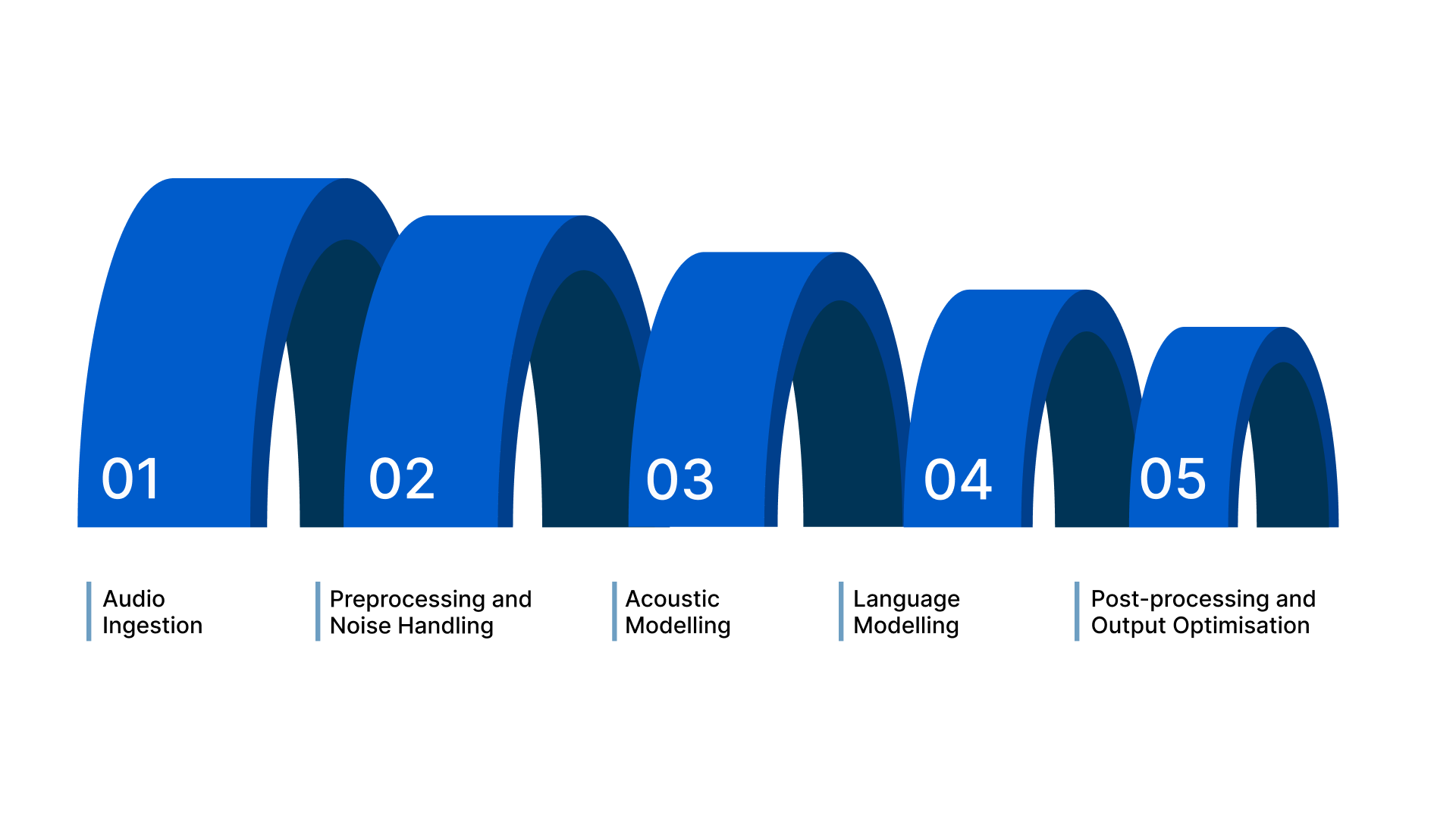

Enterprise speech recognition operates through a structured, multi-stage processing pipeline designed for accuracy, low latency, and scalability across diverse, real-world audio environments. Core processing stages include:

1. Audio Ingestion

The system accepts live streams from IVR, telephony, web, and mobile apps, as well as call recordings and uploaded media files. It handles various formats, sampling rates, and network conditions while maintaining real-time processing for immediate analysis.

2. Preprocessing and Noise Handling

Incoming audio is normalised, background noise is filtered, and silent segments are removed. Low-fidelity channels, such as compressed IVR or mobile calls, are enhanced using signal processing techniques that preserve speech clarity without adding noticeable latency.
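To make the normalisation and silence-removal steps concrete, here is a minimal Python sketch. It assumes audio has already been decoded into a list of float samples between -1.0 and 1.0; the function names, the 160-sample frame size, and the 0.02 energy threshold are illustrative choices, not values taken from any particular product.

```python
import math

def peak_normalise(samples, target_peak=0.9):
    """Scale the signal so its loudest sample has magnitude target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # pure silence: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]

def frame_rms(frame):
    """Root-mean-square energy of one frame."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def drop_silent_frames(samples, frame_size=160, threshold=0.02):
    """Keep only the frames whose RMS energy reaches the threshold.

    frame_size=160 corresponds to 20 ms at an assumed 8 kHz telephony rate.
    """
    kept = []
    for start in range(0, len(samples), frame_size):
        frame = samples[start:start + frame_size]
        if frame_rms(frame) >= threshold:
            kept.extend(frame)
    return kept
```

Production systems typically use more robust voice activity detection than a fixed energy threshold, but the flow is the same: normalise first, then discard frames that carry no speech.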

3. Acoustic Modelling

The audio waveform is converted into phonetic or sub-word representations. Advanced models trained on Indian languages capture tonal, regional, and accent-specific variations, allowing the system to recognise even heavily code-switched speech such as Hindi–English or Tamil–English.

4. Language Modelling

Phonetic sequences are mapped to words using probabilistic and domain-specific language models. Contextual models ensure that enterprise vocabulary, such as financial terms, medical jargon, or product names, is accurately transcribed, reducing errors in business-critical workflows.
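One common way to combine acoustic evidence with a domain-aware language model is to add their log scores and pick the best-scoring candidate. The sketch below illustrates that idea only; the word probabilities, the banking term list, and the boost factor are made-up values for demonstration, and real systems use far richer models than a unigram table.

```python
import math

# Hypothetical unigram probabilities; a real model would be learned from text.
GENERAL_LM = {
    "check": 0.01,
    "my": 0.03,
    "balance": 0.001,
    "ballads": 0.001,
}

# Illustrative banking vocabulary that should be favoured in this domain.
DOMAIN_TERMS = {"balance", "neft", "emi"}

def lm_log_prob(words, domain_boost=5.0, unknown_prob=1e-6):
    """Sum of log word probabilities, with domain terms boosted."""
    total = 0.0
    for word in words:
        prob = GENERAL_LM.get(word, unknown_prob)
        if word in DOMAIN_TERMS:
            prob = min(1.0, prob * domain_boost)
        total += math.log(prob)
    return total

def pick_transcript(hypotheses, lm_weight=1.0):
    """Choose the best text from (text, acoustic_log_score) pairs."""
    def combined(hypothesis):
        text, acoustic_log_score = hypothesis
        return acoustic_log_score + lm_weight * lm_log_prob(text.lower().split())
    return max(hypotheses, key=combined)[0]
```

With these toy numbers, "check my ballads" has the slightly better acoustic score, but the domain boost on "balance" tips the decision towards the phrase a banking customer is far more likely to have said.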

5. Post-processing and Output Optimisation

The system applies punctuation, detects keywords, identifies individual speakers in multi-party conversations, and outputs structured text suitable for analytics, compliance, or workflow automation. It also generates confidence scores, enabling enterprises to flag uncertain transcriptions for review.
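Confidence scores are most useful when they drive a routing decision. The following sketch shows one way that could look, assuming each transcript segment arrives with a speaker label and a confidence value between 0.0 and 1.0. The `Segment` structure and the 0.80 threshold are assumptions for illustration, not a documented output format.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    speaker: str
    text: str
    confidence: float  # assumed to lie between 0.0 and 1.0

def split_for_review(segments, threshold=0.80):
    """Separate segments into auto-accepted and needs-human-review lists."""
    accepted = [s for s in segments if s.confidence >= threshold]
    needs_review = [s for s in segments if s.confidence < threshold]
    return accepted, needs_review
```

The right threshold depends on the workflow: a compliance archive may route far more segments to review than a search index would.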

Also Read: Speech SDK: Developer’s Guide to Speech Recognition

This pipeline enables enterprises to reliably process millions of minutes of voice data across languages and audio conditions. With the system architecture understood, the next step is to identify which features truly matter at enterprise scale.

Key Speech Recognition Features That Drive Impact

The right platform handles linguistic diversity, complex workflows, and enterprise reliability, directly affecting transcription accuracy and the value derived from voice data. Here are a few must-have enterprise capabilities:

- Multilingual and Mixed-language Support: Accurately transcribes Hindi, Tamil, Telugu, Marathi, Bengali, and mixed-language speech (e.g., Hindi-English or Tamil-English), capturing regional dialects and accents.

- Real-time and Batch Transcription: Processes live voice streams from IVR and applications while also supporting large-scale historical audio for analytics, compliance, and archival purposes.

- Domain-customised Language Models: Industry-specific vocabulary for BFSI, healthcare, legal, and automotive domains is reliably recognised, ensuring critical terms are transcribed accurately.

- Keyword Spotting and Profanity Filtering: The system detects specific terms to monitor, support regulatory compliance, and ensure brand safety across customer interactions.

- Speaker Identification: Multiple participants in meetings, call centres, and multi-party IVR systems are distinguished to generate structured insights.

- API and SDK Integration: Enterprise workflows are supported with seamless embedding into Android, iOS, web platforms, and backend systems, enabling rapid deployment and automation.
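Once audio has been transcribed, capabilities such as keyword spotting and profanity filtering can be applied to the text itself. Here is a minimal sketch using Python's standard `re` module. The function names and term lists are hypothetical, and the word-boundary matching shown here is best suited to Latin-script or romanised transcripts; native Indic scripts with combining vowel signs would likely need script-aware tokenisation instead.

```python
import re

def spot_keywords(transcript, keywords):
    """Return {keyword: count} for whole-word, case-insensitive matches."""
    hits = {}
    for keyword in keywords:
        pattern = r"\b" + re.escape(keyword) + r"\b"
        count = len(re.findall(pattern, transcript, flags=re.IGNORECASE))
        if count:
            hits[keyword] = count
    return hits

def mask_terms(transcript, blocked_terms, mask_char="*"):
    """Replace each blocked term with mask characters of the same length."""
    for term in blocked_terms:
        pattern = r"\b" + re.escape(term) + r"\b"
        transcript = re.sub(
            pattern,
            lambda match: mask_char * len(match.group()),
            transcript,
            flags=re.IGNORECASE,
        )
    return transcript
```

For example, calling `spot_keywords` with a list of escalation terms on each call transcript gives a quick signal for which conversations a quality team should listen to first.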

Also Read: Power of Speech to Text API: A Game Changer for Content Creation

With these capabilities established, the next step is to explore practical enterprise use cases where speech recognition delivers measurable impact.

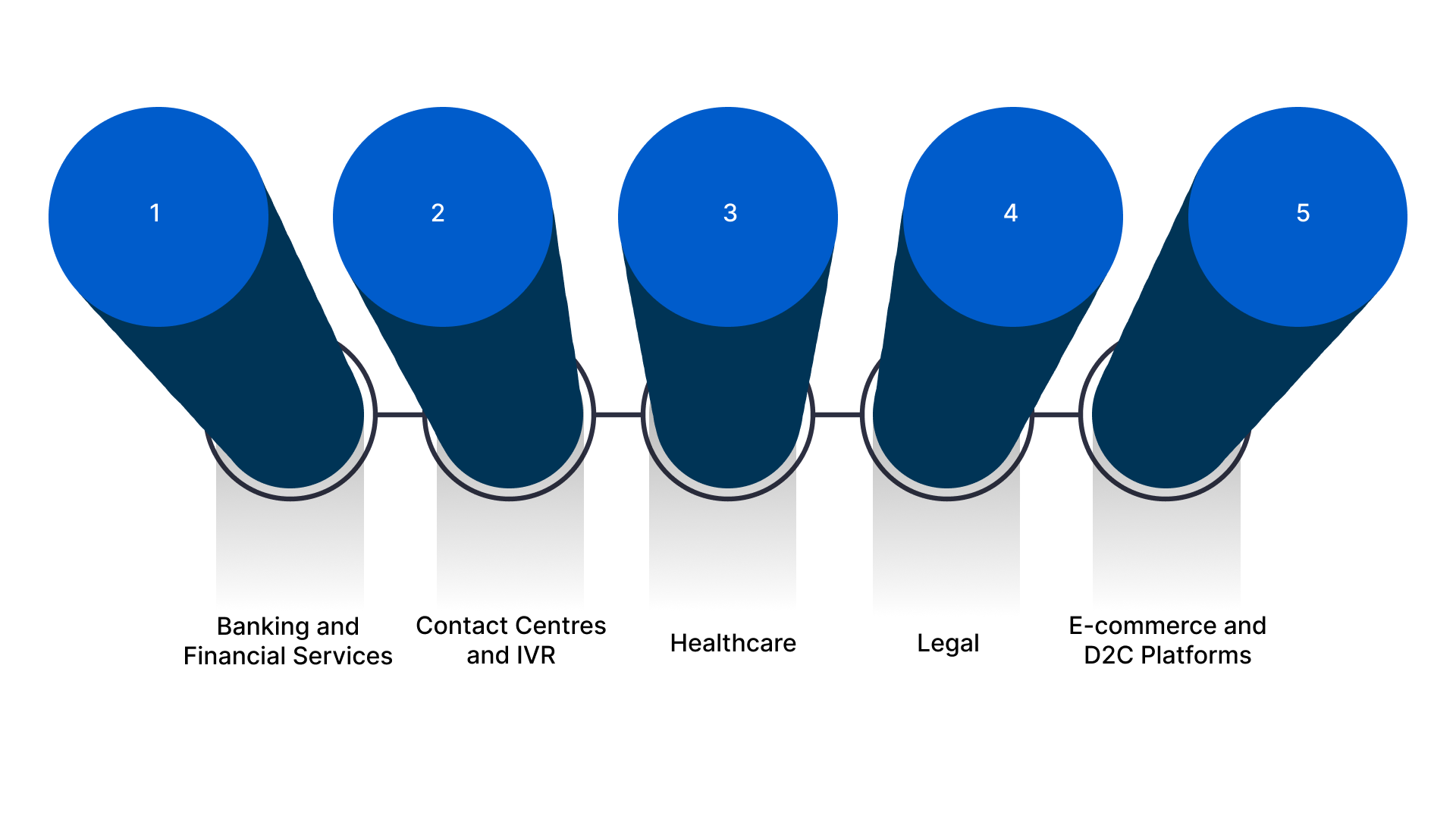

Speech Recognition Use Cases and Applications

Speech recognition enables a wide range of high-impact enterprise use cases across Indian industries. Let’s take a closer look:

1. Banking and Financial Services

Enterprises can process voice-based queries, authenticate transactions, and monitor compliance in multiple Indian languages.

Example: Indian banks are adopting multilingual voice AI assistants and voice-based support to improve accessibility for users of regional languages. This highlights the strong demand for speech recognition in banking workflows.

2. Contact Centres and IVR

Automatic transcription, intent detection, sentiment analysis, and quality monitoring reduce human dependency while improving response times.

Example: Airtel runs automated speech recognition on 84% of inbound calls, enabling faster query resolution and enhanced customer satisfaction.

3. Healthcare

Transcription of doctor-patient interactions, clinical notes, and consultations improves documentation quality and reduces administrative workload.

Example: Hospitals in India are increasingly using ASR to capture patient details in real time, supporting multilingual interactions across staff and patients.

4. Legal

Court proceedings and legal documentation benefit from real-time transcription and accurate multilingual records.

Example: Delhi Courts piloted a hybrid courtroom with speech-to-text capabilities, while the Supreme Court uses AI transcription to produce instant text versions of oral arguments, ensuring precise and efficient documentation.

5. E-commerce and D2C Platforms

Voice commands enable search, product discovery, and order handling in regional languages, improving accessibility and user experience.

Example: Meesho’s generative AI-powered voice bot handles approximately 60,000 daily calls, transcribing queries in multiple languages to facilitate order processing and customer support.

If your business needs a reliable speech-to-text solution, Reverie’s Speech-to-Text API is built to help. It supports real-time transcription across multiple Indian languages and dialects. Get started today!

However, deploying speech recognition at scale is not without its challenges. Let’s examine what enterprises must solve.

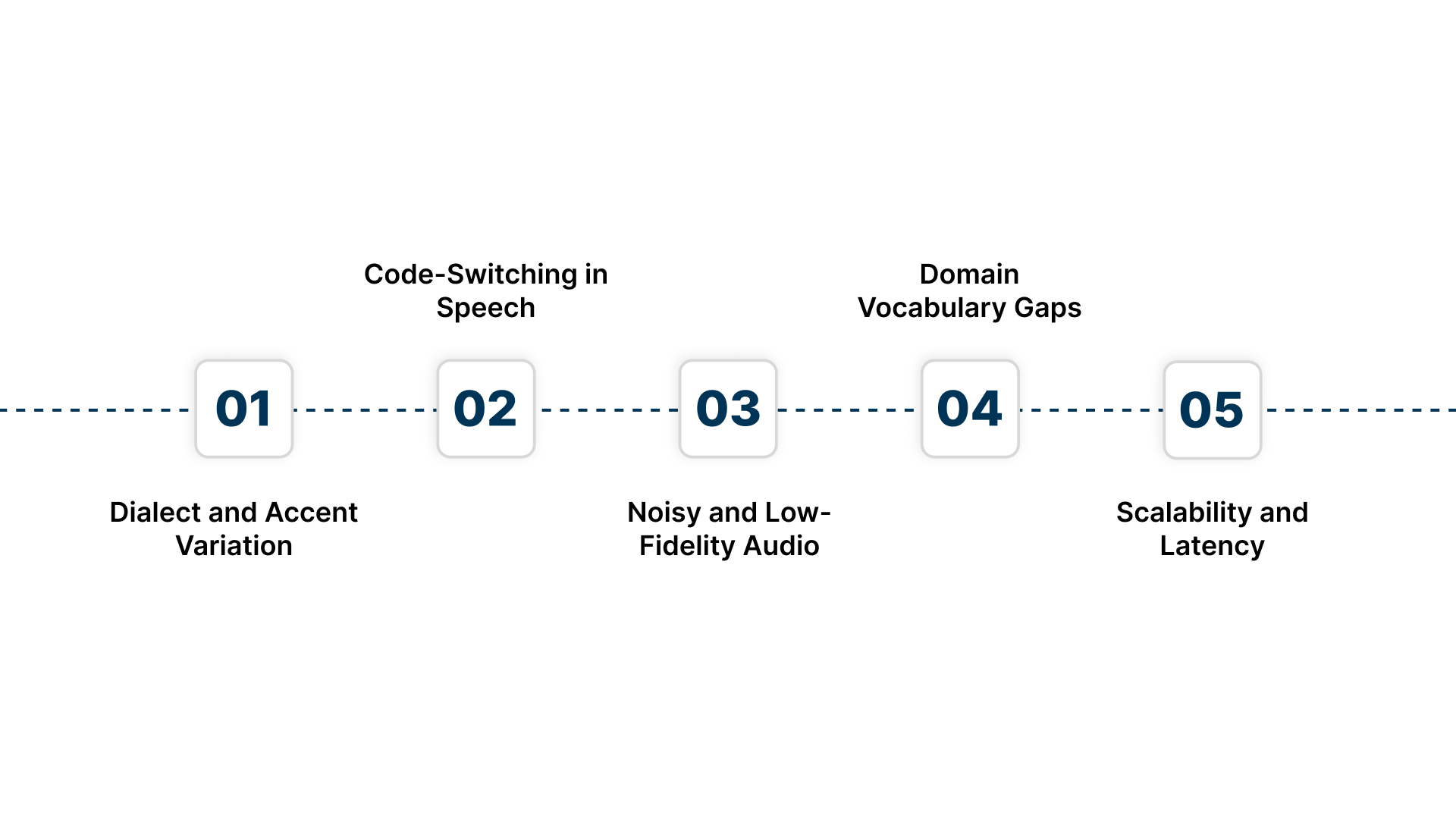

Common Speech Recognition Challenges and Solutions

While speech recognition delivers significant value, real-world enterprise deployment introduces technical and operational challenges. Addressing these issues is critical for accurate, scalable, and multilingual transcription.

1. Dialect and Accent Variation: Indian languages have numerous regional accents and pronunciation differences, which can reduce transcription accuracy.

Solution: Train acoustic and language models specifically on India’s regional accents and dialects. Regularly update models with localised speech samples to maintain high recognition accuracy across diverse user groups.

2. Code-Switching in Speech: Many Indian speakers mix languages, such as Hindi-English or Tamil-English, within a single sentence, confusing standard models.

Solution: Implement language models trained on mixed-language data so they can recognise code-switched speech. Use dynamic language detection and context-aware algorithms to maintain seamless transcription across language boundaries.

3. Noisy and Low-Fidelity Audio: Call centres, IVR systems, and mobile recordings often contain background noise or low-quality audio, leading to misinterpretation.

Solution: Apply noise-robust preprocessing, such as spectral subtraction and adaptive filtering. Fine-tune models for low-fidelity audio and IVR environments to preserve transcription accuracy under challenging conditions.

4. Domain Vocabulary Gaps: Generic models struggle with specialised terms in banking, legal, healthcare, or automotive domains.

Solution: Train custom language models with domain-specific vocabularies. Continuously incorporate business-specific terminology and acronyms to ensure accurate recognition of technical or regulatory content.

5. Scalability and Latency: High call volumes or batch processing can overwhelm systems, leading to delayed or failed transcriptions.

Solution: Deploy a streaming architecture and distributed processing. Optimise model inference with GPU acceleration and parallelisation to handle millions of minutes of audio in real time while maintaining low latency.
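As a small-scale illustration of the parallelisation idea for batch workloads, the sketch below fans a list of recordings out across worker threads and collects failures separately so one corrupt file does not stall the batch. The `transcribe` callable is a placeholder you would supply yourself (for example, a wrapper around whichever speech-to-text service you use); nothing here reflects a specific vendor's API.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

def transcribe_batch(paths, transcribe, max_workers=8):
    """Run transcribe(path) for every path in parallel.

    Returns (results, failures): two dicts keyed by path, holding the
    transcript or the raised exception respectively.
    """
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(transcribe, path): path for path in paths}
        for future in as_completed(futures):
            path = futures[future]
            try:
                results[path] = future.result()
            except Exception as exc:  # record the failure, keep processing
                failures[path] = exc
    return results, failures
```

Threads suit this pattern when each call mostly waits on a remote service. GPU-bound inference running locally would more likely batch audio inside the model server rather than rely on client-side threads.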

With the challenges addressed, let’s explore how a reliable solution like Reverie’s Speech-to-Text API improves workflow efficiency, transcription accuracy, and the integration of multilingual voice data.

How Reverie’s Speech-to-Text API Enhances Your Workflow

An effective speech recognition system only delivers value when organisations can convert voice into actionable insights. Reverie’s Speech-to-Text API extends this capability, enabling your business to quickly transcribe audio from meetings, phone calls, podcasts, live streams, and other voice data.

Here’s how it enhances workflow efficiency, accuracy, and multilingual integration:

- Transcription in 11 Indian Languages: Seamlessly convert conversations, including regional or mixed-language content, into correctly punctuated text.

- Real-time and Batch Processing: Monitor calls live or process large volumes of audio later, giving you flexibility in how you analyse voice data.

- Voice Typing and Command Support: Enable users to create text by speaking or to invoke actions via voice commands.

- Smooth Integration and Developer Support: Use APIs or SDKs with clear documentation and a testing playground to integrate speech transcription into your CRM, contact centre, or internal systems.

- Data Security & Privacy Compliance: Reverie encrypts data and adheres to strong privacy standards, essential for sectors such as legal, healthcare, and finance.

Clients have reported measurable results, including a 60% increase in engagement, 2.5× growth in lead generation, a 62% reduction in operational costs, and a 52% rise in CSAT.

These results demonstrate that Reverie improves transcription accuracy while enhancing workflow efficiency and delivering measurable business outcomes.

Conclusion

A speech recognition system is a core technology for Indian enterprises, converting voice interactions into structured data to power automation, analytics, compliance, and multilingual customer engagement. Accurate transcription is critical for operating voice-driven platforms effectively in India’s linguistically diverse digital environment.

Reverie’s Speech-to-Text API integrates seamlessly into your digital ecosystem, supporting both cloud and on-premises deployments for scalability and flexibility. Its features include keyword spotting, profanity filtering, sentiment analysis, and smart analytics, all designed to enhance workflow efficiency and user experience.

Ready to utilise your speech recognition system for accurate, real-time voice insights? Sign up now and see how it can scale across your workflows.

FAQs

1. How accurate are speech recognition systems for Indian languages?

Modern enterprise speech recognition systems trained on Indian language data achieve high accuracy across Hindi, Tamil, Telugu, Marathi, and Bengali. Accuracy varies based on audio quality, domain-specific vocabulary, and regional dialect coverage.

2. Can speech recognition work on IVR and call centre recordings?

Yes. Enterprise speech recognition systems are designed for low-fidelity telephony audio and noisy call centre environments, enabling accurate transcription, keyword detection, and compliance monitoring.

3. Is speech recognition suitable for regulated industries like banking?

Yes. Enterprise ASR platforms support on-premises deployment, encryption, access controls, and audit logging to meet BFSI and regulatory compliance requirements.

4. How does speech recognition handle mixed-language conversations?

Mixed-language, or code-switched, speech is handled using specialised language models trained on Indian conversational patterns, such as Hindi-English and Tamil-English.

5. Can speech recognition integrate with CRM and analytics systems?

Enterprise ASR platforms integrate via APIs and SDKs into CRM, ticketing, analytics, and automation platforms, enabling real-time and batch processing of voice data.