Did you know the speech-to-text market in India is projected to reach $1,106.9 million by 2030? This growth reflects how rapidly Indian enterprises across e-commerce, healthcare, education, and legal services are adopting speech technologies to handle increasing volumes of multilingual voice data and real-time interactions.

Yet despite this momentum, much of the voice data that enterprises generate every day remains underutilised. Manual workflows, slow turnaround times, and accuracy gaps prevent organisations from converting spoken interactions into timely, actionable insights.

In this blog, you’ll discover how the future of speech recognition enables accurate, scalable, real-time transcription to support enterprise automation, compliance, and informed decision-making.

Key Takeaways

- Multilingual ASR is Essential: Enterprise systems must accurately handle multiple languages, dialects, and code-switching to operate across diverse markets.

- Rich Outputs Enable Action: Metadata such as sentiment, speaker roles, and semantic tags allow integration with analytics, search, and compliance systems.

- Scalability Drives Efficiency: Real-time transcription at scale converts audio and video into searchable, actionable data across business units.

- Human-in-the-loop Ensures Precision: Continuous expert feedback maintains domain-specific accuracy in technical, legal, and specialised workflows.

- Responsible ASR Builds Trust: Fairness, explainability, privacy, and accountability are critical to meet regulatory requirements and maintain stakeholder confidence.

1. Truly Multilingual and Adaptive Speech Models

India’s e-commerce industry is projected to reach Rs. 29,88,735 crore, a scale that brings an enormous volume of customer interactions across many languages. Enterprises need ASR systems that move beyond English, because many platforms still struggle with regional dialects, low-resource languages, and the code-switched speech common in India.

Advances in end-to-end ASR architectures, transfer learning, and self-supervised learning are enabling future systems to:

- Automatically adapt to new languages, accents, and dialects

- Handle code-switching within sentences or conversations, common in bilingual business communications

- Exploit cross-lingual patterns to improve performance for low-resource languages

- Learn from limited annotated data to reduce deployment costs and speed up adoption
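
To make code-switching concrete, here is a minimal sketch that flags where a transcript changes script, for example from Devanagari to Latin. It is a post-processing heuristic over text, not how an ASR model detects language internally, and the function names `script_of` and `switch_points` are hypothetical. It also assumes the transcript keeps each language in its native script, so romanised Hindi would go unnoticed.

```python
import unicodedata


def script_of(token: str) -> str:
    """Return a coarse script label based on the first letter in the token."""
    for ch in token:
        if ch.isalpha():
            name = unicodedata.name(ch, "")
            if name.startswith("DEVANAGARI"):
                return "devanagari"
            if name.startswith("LATIN"):
                return "latin"
            return "other"
    return "none"  # token has no letters (digits, punctuation)


def switch_points(text: str) -> list[int]:
    """Return indices of tokens whose script differs from the previous lettered token."""
    points = []
    prev = None
    for i, token in enumerate(text.split()):
        script = script_of(token)
        if script == "none":
            continue  # ignore tokens without letters
        if prev is not None and script != prev:
            points.append(i)
        prev = script
    return points


if __name__ == "__main__":
    # Hindi and English mixed in one customer utterance
    print(switch_points("मुझे refund चाहिए please"))
```

Counting switch points per call is one simple way to estimate how much mixed-language speech a workflow actually has to handle before choosing a model.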

Key Enterprise Use Cases:

- E-commerce: Customer support in regional languages and multilingual chatbots

- Healthcare: Accurate patient documentation in local dialects, ensuring compliance and care continuity

- Education: Bilingual or multilingual learning platforms for diverse learners

- Legal: Court transcription and compliance across multiple languages and accents

Real‑world Example: The Delhi High Court launched its first pilot hybrid courtroom with speech-to-text transcription. The system converts evidence and spoken testimony into text in real time, improving efficiency and reducing reliance on stenographers.

Understanding the language complexity and accuracy requirements of each use case is essential when selecting an enterprise speech-to-text solution. Platforms such as Reverie’s STT API are designed to address these needs by supporting Indian languages, dialectal variations, and mixed-language speech across critical business workflows.

Next, we explore how enriched and standardised ASR outputs make spoken content immediately actionable for enterprise analytics and workflows.

2. Rich and Standardised Output Beyond Text

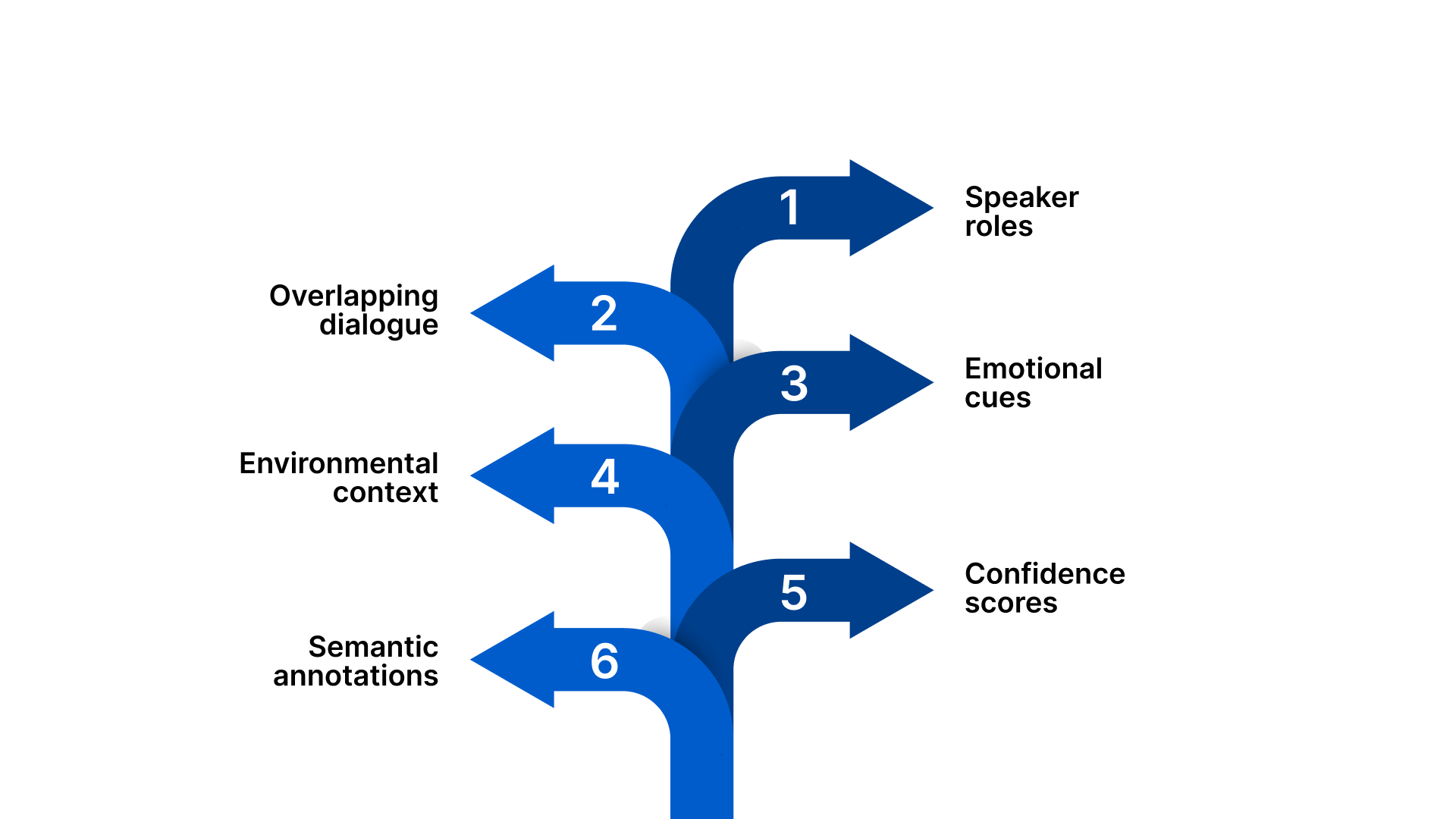

Next-generation ASR will go beyond plain text transcription. Enterprises increasingly require structured and enriched outputs to integrate spoken content directly into analytics platforms, compliance systems, and knowledge management workflows.

Modern ASR outputs are expected to include:

- Speaker roles: Identify speakers in multi-participant calls or meetings

- Overlapping dialogue: Capture simultaneous speech for accurate context

- Emotional cues: Detect sentiment, tone, and stress levels

- Environmental context: Differentiate speech from background noise to improve reliability

- Confidence scores: Provide quantitative reliability for downstream decision-making

- Semantic annotations: Tag topics, entities, and intent to automate processing
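
The fields listed above could be carried in a structure like the one sketched below. This is an illustrative schema only: the class name `Segment` and every field name are assumptions made for this example, not part of any published standard or of a specific vendor's API.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Segment:
    """One enriched transcript segment (hypothetical schema)."""
    start: float               # seconds from the start of the audio
    end: float                 # seconds from the start of the audio
    speaker: str               # speaker role, e.g. "agent" or "customer"
    text: str                  # transcribed words
    confidence: float          # 0.0 to 1.0, reliability of this segment
    sentiment: str = "neutral" # emotional cue attached to the segment
    entities: list = field(default_factory=list)  # semantic annotations


def to_json(segments) -> str:
    """Serialise segments so analytics or compliance systems can consume them."""
    return json.dumps([asdict(s) for s in segments], ensure_ascii=False)


if __name__ == "__main__":
    seg = Segment(0.0, 3.2, "customer", "My policy number is missing",
                  confidence=0.91, sentiment="negative", entities=["policy number"])
    print(to_json([seg]))
```

Keeping timestamps, speaker, and confidence on every segment is what lets downstream tools filter, search, and audit without re-processing the audio.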

Real‑world Example: A leading Indian life insurer used AI‑powered speech analytics to extract sentiment, tone, and language patterns from call centre interactions, reducing repeat calls, improving agent responses, and boosting operational efficiency beyond simple transcription.

Key Enterprise Applications:

- Automated summarisation: Generate concise executive meeting notes

- Enhanced search & indexing: Make audio/video content discoverable in knowledge systems

- Contextual analytics: Extract actionable insights from customer interactions

- Legal & compliance: Tag evidence and categorise cases for audits or court documentation

Adoption of common output standards (for example, specifications published by bodies such as the W3C, or the Open Voice Transcription Standard) will allow enterprises to integrate ASR outputs across multiple vendors and platforms, reducing custom adaptation and enabling scalable deployment.

With rich, standardised outputs in place, ASR can become a core tool across enterprise pipelines, supporting actionable insights at scale.

3. ASR at Scale Across Enterprise Pipelines

As ASR becomes affordable, reliable, and fast, its deployment will expand beyond niche use cases to become a core enterprise infrastructure capability. Scalable ASR enables organisations to process massive volumes of voice data across operations, training, and compliance workflows.

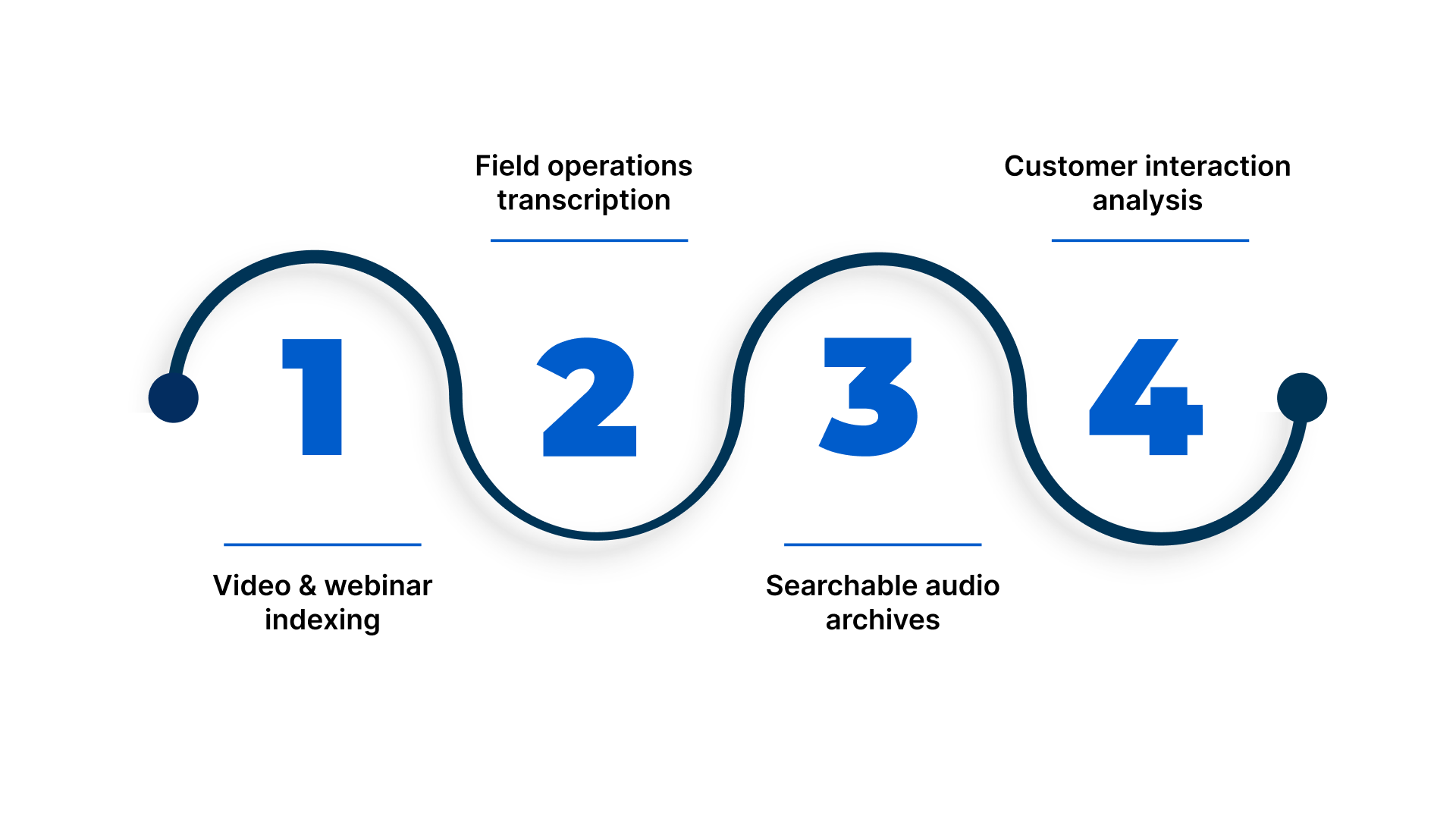

Key Enterprise Applications at Scale:

- Video and webinar indexing: Organise corporate learning content and ensure regulatory compliance

- Field operations transcription: Record industrial processes, safety checks, and audit trails in real time

- Searchable audio archives: Make legal depositions, client interviews, and meeting recordings instantly retrievable

- Customer interaction analysis: Extract insights from multi-channel e-commerce calls and support centres

How ASR Scales:

- Real-time streaming transcription for live meetings and calls

- Batch processing for large audio/video repositories

- Cloud and on-prem deployment options to meet performance and compliance requirements

- Seamless integration with enterprise APIs, analytics platforms, and BI systems
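
As a rough illustration of the batch-processing path, the sketch below fans a list of audio files out to a pool of worker threads. The `transcribe_file` function is a placeholder that stands in for a real speech-to-text call; it is hypothetical and does not represent any actual API. One failed file is recorded as an error rather than stopping the whole batch.

```python
from concurrent.futures import ThreadPoolExecutor


def transcribe_file(path: str) -> str:
    """Placeholder for a real STT request (hypothetical); returns a dummy transcript."""
    return f"transcript of {path}"


def transcribe_batch(paths, transcribe=transcribe_file, max_workers=4):
    """Transcribe many files concurrently and collect a result or error per file."""
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Submit every file first so the calls run in parallel
        futures = {path: pool.submit(transcribe, path) for path in paths}
        for path, future in futures.items():
            try:
                results[path] = {"ok": True, "text": future.result()}
            except Exception as exc:
                # Keep going: one bad recording should not fail the batch
                results[path] = {"ok": False, "error": str(exc)}
    return results


if __name__ == "__main__":
    print(transcribe_batch(["call_001.wav", "call_002.wav"]))
```

Threads suit this pattern because a transcription request mostly waits on the network; `max_workers` would be tuned to whatever rate limits the chosen service imposes.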

Real‑world Example: In 2025, Apollo Hospitals announced increased investment in AI tools to transcribe doctor notes, generate discharge summaries, and support clinical documentation across its network of more than 10,000 beds, aiming to reduce clinician workload and improve operational efficiency.

Scaling ASR enables enterprises to convert audio and video into searchable data, reduce human transcription costs, accelerate compliance, and drive data-powered decision-making.

However, scaling alone is not enough; continuous improvement through human-machine collaboration is essential to maintain high-quality outputs in domain-specific contexts.

4. Human-Machine Collaboration for Continuous Improvement

Even with advanced ASR, enterprises rely on human-in-the-loop (HITL) processes to maintain accuracy, especially in domains with specialised vocabulary. Human review and feedback loops are central to continuous model improvement, ensuring enterprise ASR systems remain reliable as language, accents, and jargon evolve.

Key Enterprise Use Cases:

- Healthcare: Recognising clinical terminology, drug names, and abbreviations

- Automotive automation: Understanding technical diagnostics and service reports

- Legal: Parsing legalese, statutes, and case-specific references

- Education: Adapting to subject-specific or multilingual lexicons

How HITL Works:

- Human reviewers validate outputs in real time or post-processing

- Corrections feed into model retraining to improve future accuracy

- Domain experts guide vocabulary expansion and contextual understanding

- Continuous feedback loops allow ASR models to self-adapt to evolving enterprise language
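
Two small pieces make this loop measurable: a rule for deciding which segments go to a reviewer, and a metric for comparing the machine transcript with the human correction. The sketch below uses word error rate (WER), the standard word-level edit distance divided by the number of reference words. The `needs_review` helper and its 0.85 threshold are assumptions chosen for illustration, not recommended values.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


def needs_review(confidence: float, threshold: float = 0.85) -> bool:
    """Route a segment to a human reviewer when model confidence is low (assumed rule)."""
    return confidence < threshold


if __name__ == "__main__":
    corrected = "patient takes metformin daily"      # reviewer's version
    machine = "patient takes met forming daily"      # ASR output
    print(word_error_rate(corrected, machine))       # one substitution + one insertion over 4 words
    print(needs_review(0.62))
```

Tracking WER on reviewed segments over time shows whether retraining on corrections is actually improving accuracy for a given domain.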

Real‑world Example: In 2025, hospitals including Breach Candy, NM Medical, and Apollo used AI transcription with clinician review to convert dictations into structured medical records. Human feedback improved accuracy for clinical terms and multilingual notes, reducing documentation time and errors.

By embedding HITL processes, enterprises can maintain high-quality, domain-specific ASR, reduce errors in critical workflows, and ensure automated transcription and analytics remain reliable over time.

With accuracy and adaptability addressed, enterprises must also deploy ASR responsibly and ethically to protect data and trust.

5. Responsible, Fair, and Accountable ASR

As enterprises deploy ASR at scale, responsible AI principles must guide system design and usage. Voice data is sensitive, making fairness, transparency, and accountability essential for regulatory compliance, client trust, and operational reliability.

Core Principles:

- Fairness: Ensure consistent performance across demographic groups, accents, and languages

- Explainability: Provide clear insight into why specific transcripts or outputs were generated

- Privacy: Protect personally identifiable voice data in compliance with global regulations and India’s Digital Personal Data Protection (DPDP) Act, 2023

- Accountability: Monitor ASR performance, maintain audit trails, and measure business impact
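
As one narrow example of the privacy principle, the sketch below masks phone numbers in a transcript before it is stored or shared. It assumes numbers appear as digits in the common Indian mobile format (ten digits starting with 6 to 9, optionally prefixed by +91 or 0). Numbers spoken and transcribed as words would not be caught, so this is a starting point rather than a complete redaction approach.

```python
import re

# Assumed pattern: Indian mobile number written as digits, with optional +91 or 0 prefix
PHONE = re.compile(r"(?<!\d)(?:\+91[\s-]?|0)?[6-9]\d{9}(?!\d)")


def redact_phone_numbers(text: str, mask: str = "[PHONE]") -> str:
    """Replace phone-like digit sequences with a mask before storage."""
    return PHONE.sub(mask, text)


if __name__ == "__main__":
    print(redact_phone_numbers("Please call me on +91 9876543210 after 5 pm"))
```

The lookarounds keep longer digit strings, such as order or account numbers, from being partially masked.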

Real‑world Example: HDFC Bank warned customers about AI voice cloning and deepfake fraud, highlighting the need for secure, fair, and responsible handling of enterprise voice data at scale.

Adhering to these principles is critical. Regulators, clients, and enterprise partners increasingly expect transparent, ethical, and auditable AI solutions that safeguard sensitive voice data and maintain operational trust.

Responsible ASR enables enterprises to scale voice-driven operations confidently while ensuring fairness, compliance, and ethical use.

Conclusion

Enterprises today face a growing challenge: massive volumes of voice data remain underutilised due to manual transcription, slow turnaround, and inconsistent accuracy. Implementing advanced speech recognition systems that are multilingual, richly structured, scalable, and responsible enables organisations to transform spoken content into actionable insights, streamline operations, and ensure compliance across departments.

Reverie offers enterprise-grade ASR solutions designed to address these exact challenges. Our platform provides accurate, real-time transcription, supports multiple languages and dialects, and integrates seamlessly with business workflows, helping enterprises achieve greater efficiency, enhance accessibility, and enable data-driven decision-making.

Why wait? Sign up for a free trial today and experience how Reverie’s precise, real-time transcription can enhance your business operations.

FAQs

1. How can enterprises ensure ASR accuracy in domain-specific contexts?

Enterprises should combine pre-trained ASR models with domain-adapted vocabulary and HITL processes. By continuously validating outputs and retraining models on specialised terminology, organisations maintain high transcription accuracy across sectors like legal, healthcare, and technical operations.

2. What is the impact of ASR on compliance and audit processes?

ASR enables automatic transcription of meetings, calls, and field operations, producing timestamped, searchable records. These transcripts facilitate regulatory compliance, support audits, reduce manual effort, and enable faster responses to legal or operational inquiries.
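
To show what "timestamped, searchable records" can mean in practice, here is a minimal sketch of a keyword index over transcript segments. The input format, a list of `(start_time, text)` pairs, is an assumption for this example, and the matching is deliberately naive: it lowercases words but does not strip punctuation or handle inflections.

```python
from collections import defaultdict


def build_index(segments):
    """Map each lowercased word to the start times of the segments containing it."""
    index = defaultdict(list)
    for start, text in segments:
        for word in set(text.lower().split()):
            index[word].append(start)
    return index


def search(index, word):
    """Return the sorted start times (in seconds) at which the word was spoken."""
    return sorted(index.get(word.lower(), []))


if __name__ == "__main__":
    segments = [
        (0.0, "Refund approved today"),
        (12.5, "Customer asked about refund"),
    ]
    index = build_index(segments)
    print(search(index, "refund"))
```

An auditor can then jump straight to the relevant moments in a recording instead of replaying the whole call.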

3. How do multilingual ASR systems handle code-switching?

Advanced ASR models use cross-lingual training and contextual prediction to detect language transitions mid-sentence. This helps maintain accurate transcription in multilingual environments, which is critical in regions like India, where enterprise operations involve extensive bilingual communication.

4. Can ASR outputs be integrated with analytics platforms?

Yes. Rich, standardised ASR outputs include metadata such as speaker roles, sentiment, and semantic tags. Enterprises can feed this data into analytics, knowledge management, and BI systems to extract actionable insights from voice interactions at scale.

5. What measures protect voice data privacy in ASR deployments?

Enterprises should implement end-to-end encryption, on-premises or edge processing, and access controls. Compliance with frameworks such as India’s Digital Personal Data Protection (DPDP) Act, 2023, or the GDPR helps ensure that sensitive voice data is stored, processed, and audited securely while supporting enterprise-scale deployments.