Did you know that India’s linguistic diversity spans 22 scheduled (constitutionally recognised) languages, some 453 living languages, and many more dialects? This makes accurate speech recognition a major challenge for enterprises.

Systems that are not designed for multilingual and mixed-language environments struggle with regional accents and often miss emotional cues such as frustration, urgency, and satisfaction. This gap weakens analytics, limits understanding of intent, and prevents enterprises from responding effectively to real customer sentiment at scale.

In this blog, you’ll explore what speech emotion recognition (SER) is, how it works, and why SER built for Indian languages, real-world scale, and seamless integration is essential for enterprise voice data.

Key Takeaways

- Emotion-Aware Insights: SER transforms raw voice into actionable emotional intelligence, enabling smarter customer and employee engagement.

- Multilingual Capability: Indian enterprises benefit from models trained on regional languages and code-switched speech.

- Workflow Efficiency: Integrating SER into workflows reduces response times, escalations, and churn while improving customer satisfaction.

- Real-Time Application: SER supports live and batch processing, making it suitable for call centres, apps, and voice platforms.

- Seamless Integration: Outputs from SER can feed analytics dashboards, automation workflows, CRM systems, and business intelligence tools.

What is Speech Emotion Recognition?

Speech Emotion Recognition (SER) identifies a speaker’s emotional state by analysing vocal signals such as tone, pitch, speed, and intensity. It extends beyond transcription by directly detecting emotions such as happiness, frustration, urgency, and satisfaction from speech.

SER can be applied across call centres, IVR systems, apps, and voice-enabled platforms to measure customer sentiment, detect agent stress, and enhance engagement. By interpreting subtle vocal cues, businesses can make data-driven decisions, improve customer experience, and refine automated responses to human emotions.

Now, let’s explore why SER matters for Indian enterprises, especially those handling multilingual and code-switched voice data.

Why Speech Emotion Recognition Matters for Indian Enterprises

Ignoring emotional context means enterprises lose critical insights into customer satisfaction, service quality, agent behaviour, escalation risk, churn, and engagement. In India’s voice-first market, millions interact daily through call centres, IVR systems, and voice assistants, making emotion a key factor in turning voice data into actionable insights.

Below are the primary ways speech emotion recognition drives measurable business impact:

- Customer Satisfaction & Service Quality: Detect emotional cues to measure satisfaction, identify service gaps, and improve overall experience across multiple languages.

- Agent Behaviour & Training: Analyse tone and stress levels to optimise agent performance and design better training for call centre and field staff.

- Escalation Risk & Churn Signals: Identify frustration, urgency, or dissatisfaction in real time to trigger smarter escalations and reduce churn.

- Trust and Engagement Levels: Understand emotional engagement to maintain customer trust and strengthen long-term relationships.

- Emotion-Aware Automation: Feed emotional insights into workflows and AI systems for smarter, context-sensitive automation.

Extracting emotional cues from speech turns raw audio into actionable intelligence that improves customer experience and operations. Now, let’s see how speech emotion recognition works in practice.

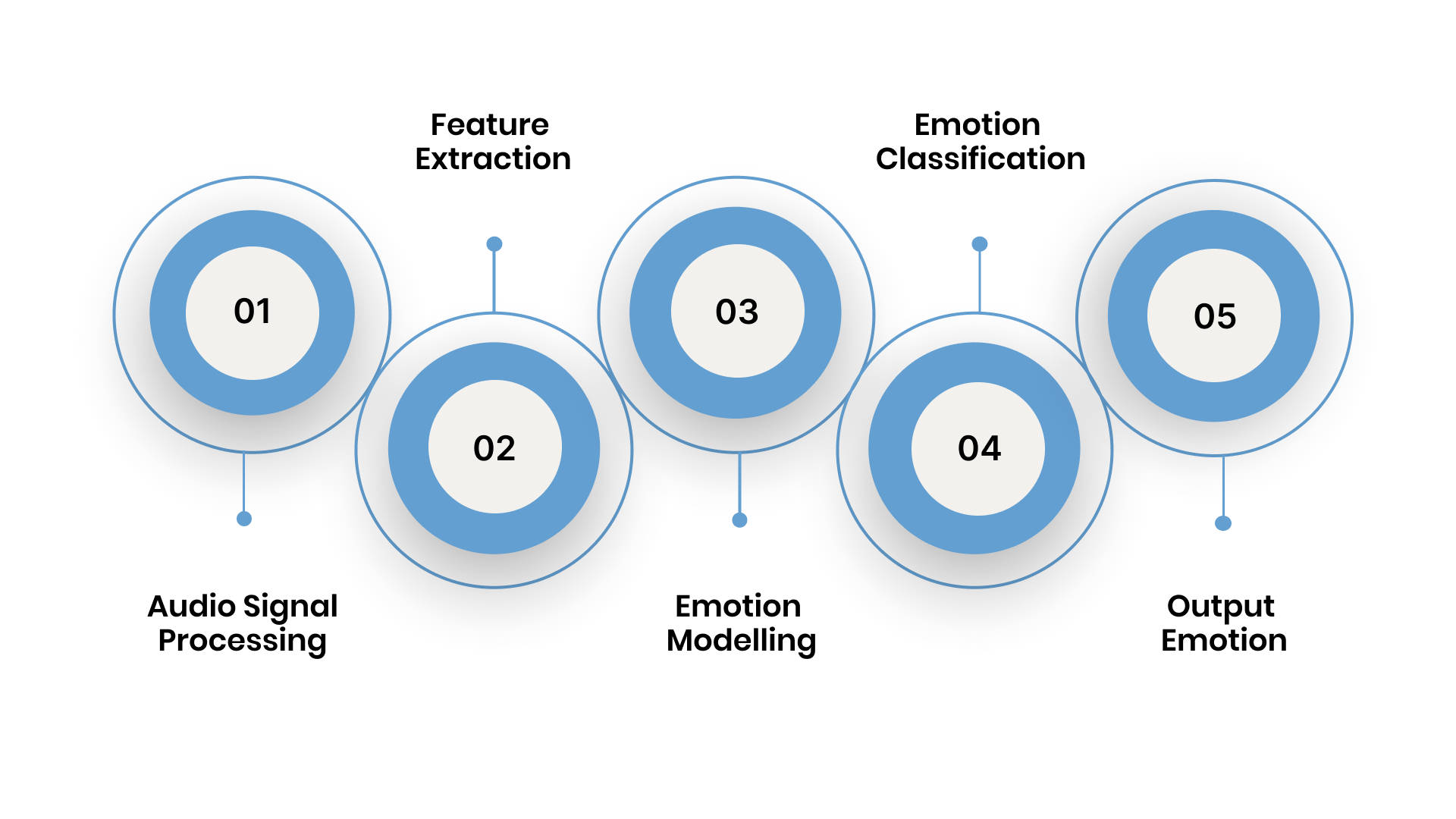

How Speech Emotion Recognition Works

At a high level, speech emotion recognition systems process raw audio through a multi-stage pipeline, converting it into structured emotional intelligence. This approach drives smarter decisions and improves customer experience across multilingual environments. Here’s a closer look at each stage of the process:

1. Audio Signal Processing

The system captures audio from call centres, IVR, voice assistants, and other enterprise channels. It cleans and normalises recordings, supporting both live and batch processing to ensure high-quality input for accurate emotion detection.
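
To make the cleaning step concrete, here is a minimal batch-mode sketch in plain Python, assuming mono audio already decoded into a list of float samples at 16 kHz. The function name `preprocess` and the 25 ms / 10 ms framing are illustrative choices, not a description of any particular product; a live pipeline would apply the same steps to a rolling buffer, and production systems typically add resampling, noise suppression, and voice-activity detection.

```python
from typing import List


def preprocess(samples: List[float], frame_len: int = 400, hop: int = 160) -> List[List[float]]:
    """Clean a mono recording and slice it into overlapping analysis frames.

    Assumes float samples; frame_len=400 and hop=160 give 25 ms frames with a
    10 ms hop at a 16 kHz sample rate (illustrative values).
    """
    if not samples:
        return []
    # Remove any DC offset so that silence sits at zero.
    mean = sum(samples) / len(samples)
    centred = [s - mean for s in samples]
    # Peak-normalise to [-1, 1] so recordings from different channels are comparable.
    peak = max(abs(s) for s in centred)
    if peak > 0:
        centred = [s / peak for s in centred]
    # Slice into overlapping frames; a trailing partial frame is dropped.
    return [
        centred[start:start + frame_len]
        for start in range(0, len(centred) - frame_len + 1, hop)
    ]
```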

2. Feature Extraction

Audio is transformed into machine-readable features that capture emotional cues such as pitch, energy, speech rate, and timbre. These cues signal stress, urgency, or engagement, forming the foundation for detecting subtle emotions across multilingual and mixed-language speech.
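
The sketch below computes three of these cues for a single frame using only the Python standard library: RMS energy (intensity), zero-crossing rate (a rough noisiness cue), and an autocorrelation pitch estimate. The 75-400 Hz search range is an assumption about typical speaking voices; speech rate and timbre descriptors such as MFCCs are omitted, and real systems usually rely on dedicated audio libraries (for example librosa or openSMILE) rather than hand-rolled loops.

```python
import math
from typing import Dict, List, Optional


def rms_energy(frame: List[float]) -> float:
    # Root-mean-square amplitude: a simple proxy for vocal intensity.
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def zero_crossing_rate(frame: List[float]) -> float:
    # Fraction of adjacent sample pairs that change sign; higher for noisy or breathy sounds.
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def estimate_pitch(frame: List[float], sample_rate: int = 16000,
                   fmin: float = 75.0, fmax: float = 400.0) -> Optional[float]:
    # Autocorrelation pitch estimate: find the lag (within an assumed speaking-voice
    # range) at which the frame best matches a shifted copy of itself.
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)
    best_lag, best_corr = 0, 0.0
    for lag in range(min_lag, max_lag + 1):
        corr = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    # No positive correlation (e.g. silence) means no pitch estimate.
    return sample_rate / best_lag if best_lag else None


def frame_features(frame: List[float], sample_rate: int = 16000) -> Dict[str, Optional[float]]:
    # Bundle the per-frame cues into one feature record.
    return {
        "rms": rms_energy(frame),
        "zcr": zero_crossing_rate(frame),
        "pitch_hz": estimate_pitch(frame, sample_rate),
    }
```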

3. Emotion Modelling

The extracted features are analysed using machine learning models such as convolutional neural networks (CNNs), recurrent neural networks (RNNs, including LSTMs), Transformers, or hybrid approaches that combine acoustic and text-based cues. These models capture local spectral, temporal, and contextual patterns in speech, and they are trained to recognise emotions across multilingual and code-switched conversations.
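
Training a CNN or Transformer is beyond a short example, so the sketch below swaps in a deliberately simple technique, a nearest-centroid classifier, to show the core idea behind emotion modelling: learn what each emotion looks like in feature space from labelled examples, then compare new speech against it. The three-feature vectors and labels in `TRAINING` are hypothetical toy data, not real measurements.

```python
import math
from typing import Dict, List, Sequence, Tuple


def train_centroids(examples: List[Tuple[Sequence[float], str]]) -> Dict[str, List[float]]:
    # Average the feature vectors of each labelled emotion into one centroid.
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in total] for label, total in sums.items()}


def predict(centroids: Dict[str, List[float]], features: Sequence[float]) -> str:
    # Assign the emotion whose centroid is closest in Euclidean distance.
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical toy data: (normalised pitch, energy, speech rate) per utterance.
TRAINING = [
    ((0.80, 0.90, 0.85), "anger"),
    ((0.75, 0.85, 0.90), "anger"),
    ((0.50, 0.40, 0.50), "neutral"),
    ((0.45, 0.45, 0.55), "neutral"),
    ((0.30, 0.20, 0.25), "sadness"),
    ((0.25, 0.15, 0.30), "sadness"),
]
```

A neural model replaces the hand-built centroids with learned representations, but the train-then-compare structure is the same.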

4. Emotion Classification

Model outputs are mapped to predefined emotion categories such as anger, frustration, neutral, or happiness. This categorisation enables enterprises to prioritise responses, optimise agent performance, and trigger automated actions. Real-time classification helps ensure issues are addressed promptly, improving engagement and satisfaction.
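
As a minimal illustration of this mapping, the Python sketch below turns hypothetical per-class scores from a model into probabilities with a softmax and returns the top label only when its probability clears a confidence threshold. The 0.5 threshold and the `uncertain` fallback label are assumptions; in practice they would be tuned on labelled data so that low-confidence predictions do not trigger automated actions.

```python
import math
from typing import Dict, Tuple


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    # Convert raw model scores (logits) into probabilities that sum to 1.
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - top) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def classify(scores: Dict[str, float], min_confidence: float = 0.5,
             fallback: str = "uncertain") -> Tuple[str, float]:
    # Pick the most probable emotion, but refuse to commit when the model is unsure.
    # The returned confidence is always the top label's probability.
    probs = softmax(scores)
    label = max(probs, key=probs.get)
    confidence = probs[label]
    return (label if confidence >= min_confidence else fallback), confidence
```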

5. Emotion Output

The final emotion data is structured and delivered for integration into enterprise systems. It powers analytics dashboards, automation workflows, agent coaching, and business intelligence tools. This transforms raw voice data into actionable intelligence for informed decision-making and workflow efficiency.
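
One way to picture this structured output is a per-call record that downstream dashboards or CRM connectors consume as JSON. The schema below (field names such as `call_id`, `speaker`, and `confidence`, and the `hi-en` language tag) is a hypothetical example, not a format defined by any specific SER product.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class EmotionSegment:
    start_s: float      # segment start, in seconds from call start
    end_s: float        # segment end, in seconds
    speaker: str        # e.g. "customer" or "agent"
    label: str          # predicted emotion category
    confidence: float   # probability of the predicted label


@dataclass
class EmotionResult:
    call_id: str
    language: str       # hypothetical tag, e.g. "hi-en" for Hindi-English speech
    segments: List[EmotionSegment] = field(default_factory=list)

    def to_json(self) -> str:
        # asdict() converts nested dataclasses; ensure_ascii=False keeps Indic text readable.
        return json.dumps(asdict(self), ensure_ascii=False)
```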

By following this pipeline, enterprises can convert raw voice into structured emotional intelligence and improve decision-making in Indian-language contexts. Now, let’s see how SER drives measurable impact across different industries.

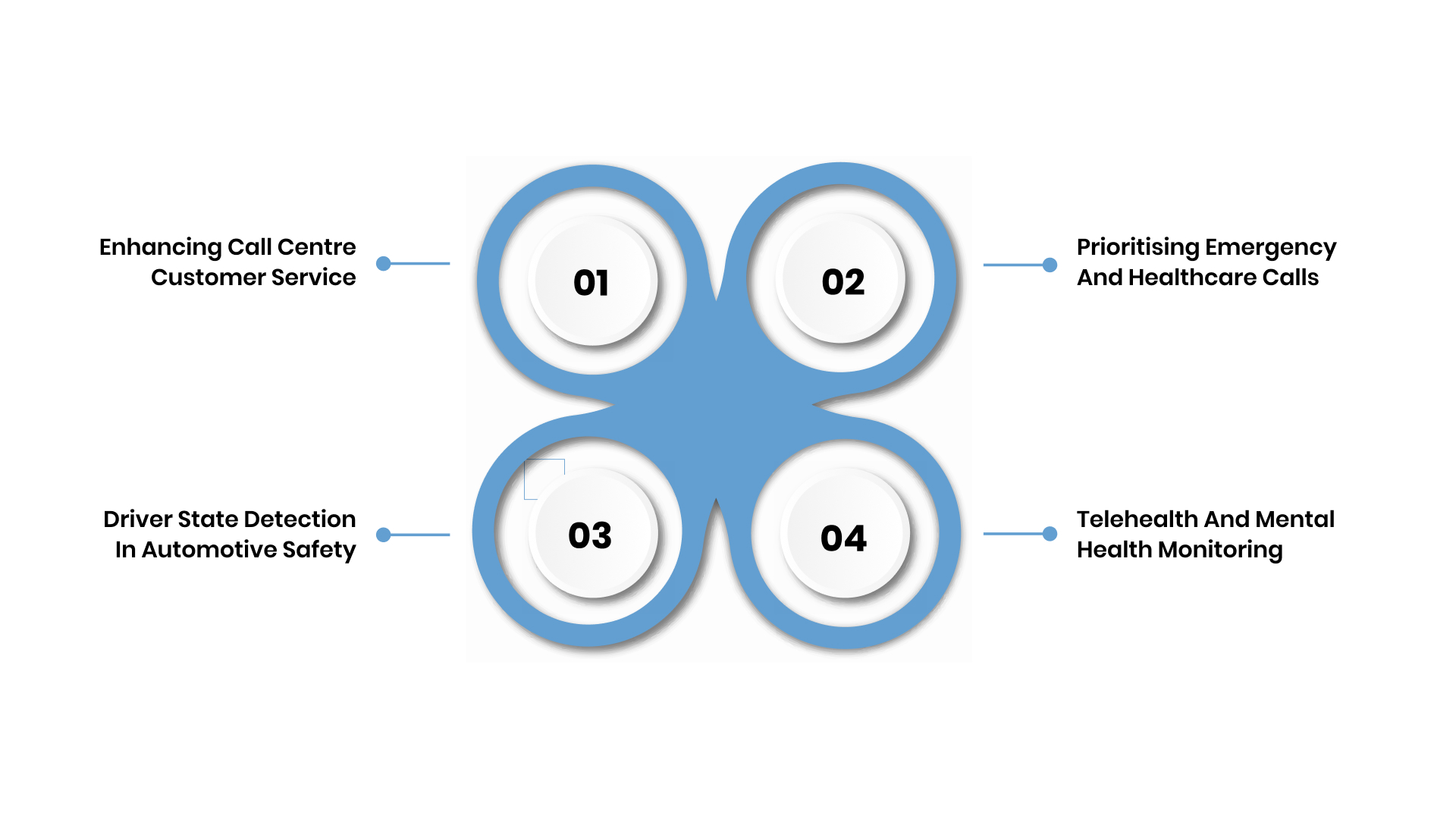

Top 4 Use Cases for Speech Emotion Recognition

Speech Emotion Recognition reveals insights from voice interactions that directly influence how enterprises respond and operate. By detecting emotions, businesses can improve customer support, enhance safety, and optimise engagement across real-world scenarios.

Below are a few key use cases where SER drives measurable impact:

1. Enhancing Call Centre Customer Service

Call centres use emotion data to route emotionally intense calls to senior agents, improving customer satisfaction and first‑contact resolution. According to research, detecting emotions in call centre speech helps assess service quality and agent performance, which companies use to optimise training and support strategies.
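
A routing rule of this kind can be very small. The Python sketch below assumes an upstream SER model has already produced a label and confidence for the caller; the queue names, the set of "intense" emotions, and the 0.7 threshold are hypothetical values that a contact centre would tune for itself.

```python
from dataclasses import dataclass


@dataclass
class CallEmotion:
    call_id: str
    label: str          # emotion predicted for the caller
    confidence: float   # probability of that label


# Hypothetical policy: which emotions count as "intense" for routing purposes.
INTENSE_EMOTIONS = {"anger", "frustration"}


def choose_queue(call: CallEmotion, min_confidence: float = 0.7) -> str:
    # Send confidently detected intense emotions to senior agents; everything else
    # goes to the general queue so uncertain predictions do not skew routing.
    if call.label in INTENSE_EMOTIONS and call.confidence >= min_confidence:
        return "senior_agents"
    return "general_queue"
```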

2. Prioritising Emergency and Healthcare Calls

Emergency call centres can use SER to flag emotionally critical calls (e.g., fear or panic) to dispatch support more quickly, reducing response times and potentially saving lives. A study notes the use of SER applications in emergency call settings to identify hazardous situations.
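
Flagging critical calls amounts to reordering a queue. The sketch below uses Python's `heapq` module to dispatch calls first by a severity ranking and then by model confidence, with arrival order as a tie-breaker. The `TriageQueue` class, the severity table, and the emotion labels are hypothetical.

```python
import heapq
import itertools
from typing import List, Optional, Tuple

# Hypothetical severity ranking: lower number = dispatched first.
SEVERITY = {"panic": 0, "fear": 1, "distress": 2, "neutral": 3}


class TriageQueue:
    def __init__(self) -> None:
        self._heap: List[Tuple[int, float, int, str]] = []
        self._counter = itertools.count()  # tie-breaker preserves arrival order

    def push(self, call_id: str, label: str, confidence: float) -> None:
        # Unknown labels rank after every known severity level.
        severity = SEVERITY.get(label, len(SEVERITY))
        # Negate confidence so that, within a severity level, stronger detections come first.
        heapq.heappush(self._heap, (severity, -confidence, next(self._counter), call_id))

    def pop(self) -> Optional[str]:
        # Return the next call to dispatch, or None when the queue is empty.
        return heapq.heappop(self._heap)[-1] if self._heap else None
```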

3. Telehealth and Mental Health Monitoring

In healthcare, SER can support clinicians by identifying signs of anxiety, depression, or distress through speech patterns, aiding early intervention and continuous patient monitoring. Studies on attention‑based SER models show potential for mental health applications by detecting varied emotional states from speech.

4. Driver State Detection in Automotive Safety

Emotion detection from in‑vehicle speech can identify driver states such as stress, distraction, or fatigue, triggering safety alerts or corrective measures. SER is increasingly applied in automotive and smart systems to enhance safety by incorporating emotional context from voice.
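
Because a single misclassified window should not trigger an alert, a common pattern is to require several recent detections before acting. The sketch below implements a simple k-of-n rule in Python: it raises an alert when at least `required` of the last `window` speech windows are labelled as stress or fatigue. The class name, window sizes, and labels are assumptions for illustration.

```python
from collections import deque
from typing import Deque, Iterable


class DriverStateMonitor:
    """Raise an alert when `required` of the last `window` speech windows look risky."""

    def __init__(self, window: int = 5, required: int = 3,
                 alert_states: Iterable[str] = ("stress", "fatigue")) -> None:
        self.recent: Deque[bool] = deque(maxlen=window)  # oldest entries drop off automatically
        self.required = required
        self.alert_states = set(alert_states)

    def update(self, state: str) -> bool:
        # Record whether this window's predicted state is one we care about,
        # then check whether enough recent windows agree.
        self.recent.append(state in self.alert_states)
        return sum(self.recent) >= self.required
```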

Next, let’s look at how organisations can implement speech emotion recognition through a structured, step-by-step approach.

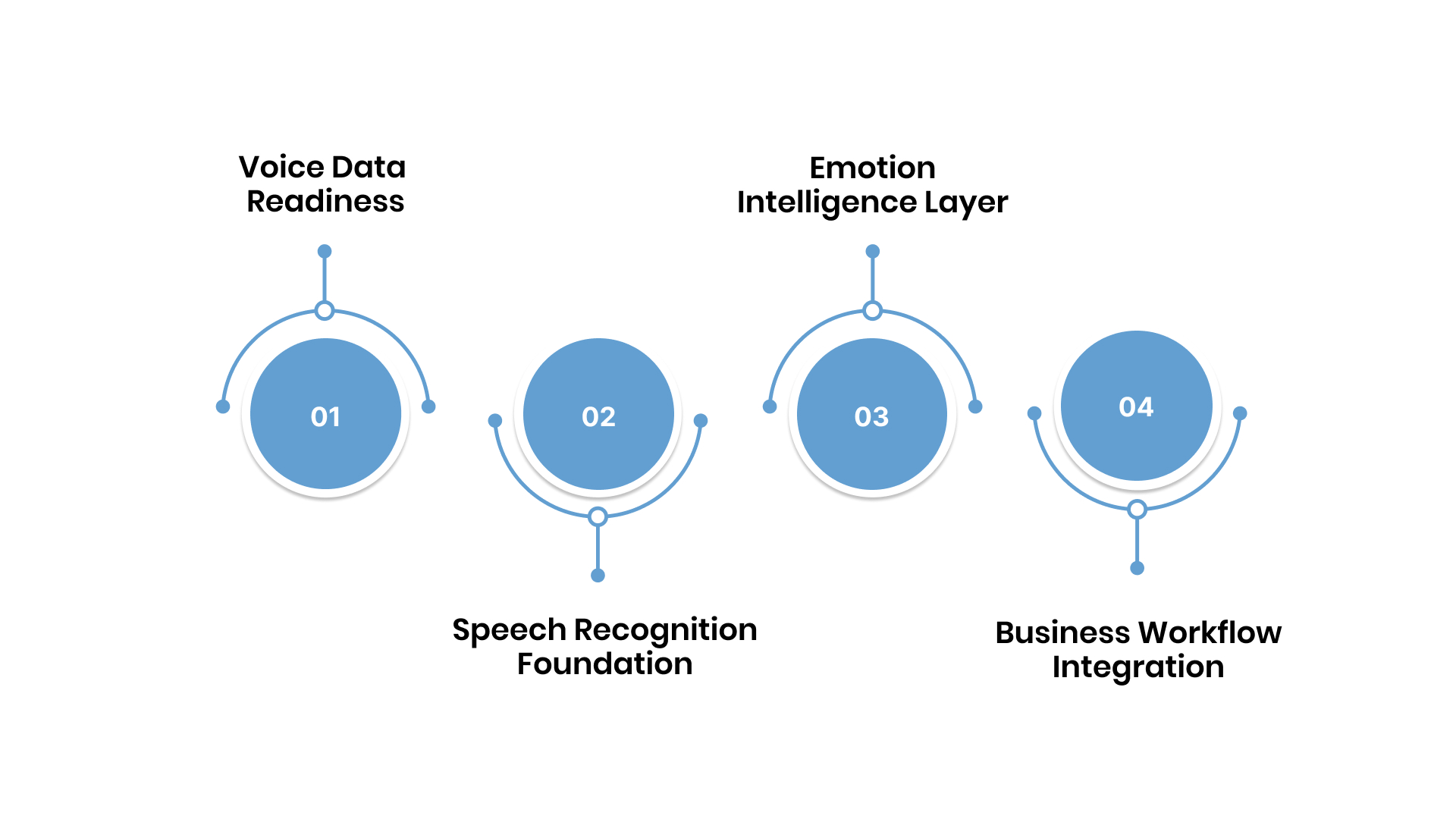

4 Key Steps to Implement Speech Emotion Recognition

For organisations adopting emotion-aware voice intelligence, a phased approach ensures seamless implementation and measurable returns. The following steps outline how to effectively integrate SER into your operations:

Step 1: Voice Data Readiness

Begin by identifying all relevant voice channels, including call centres and IVR systems, as well as apps and voice assistants. Ensure that audio quality is sufficient for analysis, establish consent and compliance, and define structured data pipelines to feed downstream systems.
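
Parts of this readiness check can be automated. The sketch below screens a recording before it enters the pipeline, assuming float samples in the range [-1, 1] and a consent flag supplied by your own systems. The thresholds (an 8 kHz minimum sample rate, as used in narrowband telephony, a 1 s minimum duration, 1% clipped samples, and a minimum RMS level) are illustrative assumptions rather than recommended values.

```python
import math
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class QualityReport:
    ok: bool
    issues: List[str] = field(default_factory=list)


def check_recording(samples: Sequence[float], sample_rate: int, consent: bool,
                    min_rate: int = 8000, min_seconds: float = 1.0,
                    max_clipped: float = 0.01, min_rms: float = 0.01) -> QualityReport:
    """Screen one recording before it enters the SER pipeline (illustrative thresholds)."""
    issues: List[str] = []
    if not consent:
        issues.append("missing consent")
    if sample_rate < min_rate:
        issues.append(f"sample rate {sample_rate} Hz is below {min_rate} Hz")
    duration = len(samples) / sample_rate if sample_rate > 0 else 0.0
    if duration < min_seconds:
        issues.append(f"too short ({duration:.2f} s)")
    if samples:
        # Samples at (or extremely near) full scale suggest clipping distortion.
        clipped = sum(1 for s in samples if abs(s) >= 0.999) / len(samples)
        if clipped > max_clipped:
            issues.append(f"{clipped:.1%} of samples clipped")
        rms = math.sqrt(sum(s * s for s in samples) / len(samples))
        if rms < min_rms:
            issues.append("signal level too low")
    return QualityReport(ok=not issues, issues=issues)
```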

Step 2: Speech Recognition Foundation

Deploy a reliable multilingual speech-to-text solution capable of streaming and batch processing to handle live and recorded audio. Build analytics dashboards to monitor transcription accuracy, voice patterns, and overall system performance before layering emotion intelligence.
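
Transcription accuracy is usually tracked as word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn the ASR output into a reference transcript, divided by the number of reference words. The sketch below computes it with a standard edit-distance dynamic programme. It assumes both transcripts have already been normalised consistently (casing, punctuation, script), which matters a great deal for code-switched and multi-script Indian-language text.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] is the edit distance between the reference words processed so far
    # and the first j hypothesis words (one row of the DP table).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)
```

For example, if the reference is "mera order abhi tak nahi aaya" and the ASR output drops "tak", one deletion over six reference words gives a WER of about 0.17.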

Step 3: Emotion Intelligence Layer

Integrate emotion detection APIs and train domain-specific models to capture relevant emotional cues. Develop emotion scoring frameworks and define rules for triggering alerts or automating workflows based on emotional insights.
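
An emotion scoring framework can start as a weighted sum. In the sketch below, each segment's emotion probabilities are multiplied by hypothetical risk weights, averaged over the call weighted by segment duration, and scaled to a 0-100 escalation-risk score; simple threshold rules then decide whether to alert an agent or escalate. All weights, thresholds, and action names are assumptions to be calibrated against your own outcomes data.

```python
from typing import Dict, List, Tuple

# Hypothetical weights: how much each emotion contributes to escalation risk.
RISK_WEIGHTS = {"anger": 1.0, "frustration": 0.8, "urgency": 0.6,
                "neutral": 0.0, "satisfaction": -0.5}


def escalation_risk(segments: List[Tuple[float, Dict[str, float]]]) -> float:
    """Duration-weighted escalation-risk score on a 0-100 scale.

    Each segment is (duration_in_seconds, {emotion: probability}).
    """
    total_time = sum(duration for duration, _ in segments)
    if total_time <= 0:
        return 0.0
    weighted = 0.0
    for duration, probs in segments:
        # Expected risk of this segment under the model's emotion probabilities.
        segment_risk = sum(RISK_WEIGHTS.get(label, 0.0) * p for label, p in probs.items())
        weighted += segment_risk * duration
    # Average over the call, clamp to [0, 1], then scale to 0-100.
    return round(100 * min(max(weighted / total_time, 0.0), 1.0), 1)


def decide_action(score: float, alert_at: float = 60.0, escalate_at: float = 80.0) -> str:
    # Simple threshold rules applied to the call-level score.
    if score >= escalate_at:
        return "escalate_to_supervisor"
    if score >= alert_at:
        return "alert_agent"
    return "no_action"
```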

Step 4: Business Workflow Integration

Connect emotion-aware insights to operational systems such as CRM or customer support platforms. Enable alerting, routing, and reporting with KPIs, and train operational teams to apply emotional intelligence to improve decision-making and the customer experience.
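
For reporting, emotion outputs are typically aggregated into KPIs. As one hypothetical example, the sketch below computes, per queue, the share of calls whose dominant emotion is negative; the queue names and the definition of "negative" are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical definition of which dominant emotions count as negative.
NEGATIVE_EMOTIONS = {"anger", "frustration"}


def negative_call_share(calls: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Return, per queue, the fraction of calls whose dominant emotion is negative.

    Each item in `calls` is a (queue_name, dominant_emotion) pair.
    """
    totals: Dict[str, int] = defaultdict(int)
    negatives: Dict[str, int] = defaultdict(int)
    for queue, emotion in calls:
        totals[queue] += 1
        if emotion in NEGATIVE_EMOTIONS:
            negatives[queue] += 1
    return {queue: negatives[queue] / count for queue, count in totals.items()}
```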

Looking to convert voice interactions into measurable business growth? Enterprises using Reverie’s Speech-to-Text API have achieved 2.5× growth in lead generation by processing high-volume voice data with speed and accuracy. Get started today and turn every conversation into a growth opportunity.

Let’s now look ahead at the future of speech emotion recognition and how it will define enterprise voice interactions in India.

Future of Speech Emotion Recognition in India

As voice becomes the primary interface for digital services in India, emotion recognition is set to become a core capability rather than a specialised feature. Enterprises will increasingly rely on emotion-aware systems to make interactions more natural, contextual, and operationally intelligent.

Below are a few emerging trends in speech emotion recognition:

- Multimodal Emotion Analysis: Combining speech with video and physiological signals to capture richer emotional context.

- Model Generalisation: Creating models that perform reliably across diverse populations, languages, and domains.

- Learning from Limited Data: Developing techniques that require fewer labelled examples while maintaining accuracy.

- Advanced Deep Learning: Using neural networks for improved feature representation and subtle emotion detection.

- Practical and Ethical Focus: Enhancing robustness, interpretability, and fairness to ensure real-world applicability.

These advancements enable emotion-aware systems for conversational AI, adaptive IVR, mental health monitoring, personalised experiences, and predictive analytics, improving operational intelligence, customer engagement, and decision-making across India.

Now, let’s explore how Reverie’s speech recognition API transforms enterprise voice data into accurate transcripts in Indian languages, enabling actionable insights and smarter operations.

Reverie’s Automatic Speech Recognition Model Simplified

An effective speech recognition system only adds value when voice is turned into actionable insights. Reverie’s ASR model converts enterprise voice data into accurate text across Indian languages, enabling real-time transcription of calls, meetings, and recordings for meaningful analysis.

Here’s how it enhances workflow:

- Transcription in 11 Indian Languages: Seamlessly convert conversations, including regional or mixed-language content, into correctly punctuated text.

- Real-time and Batch Processing: Monitor calls live or process large volumes of audio later, giving you flexibility in how you analyse voice data.

- Voice Typing and Command Support: Enable users to create text by speaking or to invoke actions via voice commands.

- Smooth Integration and Developer Support: Use APIs or SDKs with clear documentation and a testing playground to integrate speech transcription into your CRM, contact centre, or internal systems.

- Data Security & Privacy Compliance: Reverie encrypts data and adheres to strong privacy standards, essential for sectors such as legal, healthcare, and finance.

Ready to turn calls into actionable insights? Instantly transcribe customer interactions across Indian languages with Reverie’s ASR, enabling precise analysis and smarter business decisions. Contact us to learn more!

Conclusion

Speech emotion recognition is now a production-ready capability that turns voice interactions into actionable emotional insights. Indian enterprises across sectors can use it to better understand customers, users, and employees, making emotion intelligence a natural next step for any voice-first platform.

A platform like Reverie’s Speech-to-Text API enables this transformation by integrating seamlessly into your digital ecosystem. Supporting both cloud and on-premises deployments for scalability and flexibility, it offers keyword spotting, profanity filtering, sentiment analysis, and smart analytics, all designed to enhance workflow efficiency and user experience.

Ready to optimise workflows and reduce operational costs? With Reverie’s real-time transcription and sentiment analysis, enterprises have cut costs by up to 62% while improving response times and customer satisfaction. Sign up today to learn more!

FAQs

1. Can Speech Emotion Recognition work with code-switched conversations in India?

Yes, provided the models are trained on multilingual and code-switched speech. Such models can identify emotions even when speakers blend Hindi and English or mix regional languages.

2. How does SER improve agent training in call centres?

By analysing stress, frustration, or calmness in agent speech, SER highlights areas for coaching, helping supervisors design targeted training to enhance performance and reduce burnout.

3. Is real-time emotion detection feasible for high-volume enterprise environments?

Yes. Modern SER systems support streaming audio, batch processing, and scalable API integration, enabling real-time emotion insights across thousands of simultaneous interactions.

4. Can SER integrate with existing CRM or workflow systems?

Absolutely. SER outputs structured emotion data that can feed dashboards, alerting systems, or workflow automation tools to improve decision-making and customer response strategies.

5. How does SER support multilingual analytics in India?

By extracting acoustic features and training models across multiple Indian languages, SER provides consistent emotion insights across regional languages, dialects, and code-switched speech for comprehensive analytics.